AI governance

Trend 11: Adherence to AI ethics as an underlying principle for building AI systems

As AI systems are increasingly adopted for critical decision-making, the outcomes they produce carry high stakes. In the recent past, there have been examples where these outcomes were wrong and affected important human issues: an AI hiring algorithm was found to be biased against specific races and genders; a risk-assessment algorithm used in criminal sentencing had a false positive rate twice as high for black defendants as for white defendants; and a car insurance company by default classified male drivers under 25 as reckless.
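The "twice as high" comparison above is about the false positive rate (FPR): the share of actual negatives (for example, defendants who did not reoffend) that a model wrongly flags as positive, FPR = FP / (FP + TN). The following is a minimal sketch of computing it separately for each group and comparing the results. The function name, group labels and data are hypothetical toy values chosen for illustration, not figures from any real system.

```python
from collections import defaultdict


def false_positive_rate_by_group(y_true, y_pred, groups):
    """Return {group: FPR}, where FPR = FP / (FP + TN) over actual negatives."""
    fp = defaultdict(int)
    tn = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:  # only actual negatives contribute to the FPR
            if pred == 1:
                fp[group] += 1  # negative wrongly flagged as positive
            else:
                tn[group] += 1  # negative correctly left unflagged
    return {g: fp[g] / (fp[g] + tn[g]) for g in set(fp) | set(tn)}


# Hypothetical toy data: 10 actual negatives per group, plus two actual
# positives in group "A" that are ignored by the FPR calculation.
y_true = [0] * 10 + [0] * 10 + [1, 1]
y_pred = [1] * 4 + [0] * 6 + [1] * 2 + [0] * 8 + [1, 1]
groups = ["A"] * 10 + ["B"] * 10 + ["A", "A"]

rates = false_positive_rate_by_group(y_true, y_pred, groups)
ratio = rates["A"] / rates["B"]
print(rates, ratio)
```

In this toy data, group "A" has 4 false positives out of 10 negatives and group "B" has 2 out of 10, so group "A" has an FPR twice that of group "B". An overall accuracy figure would not reveal this kind of gap; the comparison has to be made per group.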

AI began attracting negative press because of failing systems, the resulting lawsuits and other societal implications, to the point that regulators, official bodies and general users now increasingly demand transparency for every decision made by AI-based systems. In the U.S., insurance companies must explain their rates and coverage decisions, while the EU introduced a right to explanation in the General Data Protection Regulation (GDPR).

All the above scenarios call for tools and techniques to make AI systems more transparent and interpretable.

To ensure trust and reliability in AI models, an ethical AI system should adhere to the following key principles:

- Human involvement: Although AI models are built to operate independently without human interference, human oversight is still necessary in some cases. For example, in fraud detection or in cases involving law enforcement, a human should stay in the loop to periodically check or review decisions made by AI models.

- Bias detection: An unbiased dataset is an important prerequisite for an AI model to make reliable, nondiscriminatory predictions. AI models are used by banks for credit scoring, for resume shortlisting and in some judicial systems; however, in some cases the datasets have been found to carry inherent bias related to color, age and sex.

- Explainability: Explainable AI comes into the picture when predictions must be justified and feature importance understood. It helps in understanding how a model arrives at its decisions, that is, which features of a given input it emphasizes when making a prediction.

- Reproducibility: A machine learning model should give consistent predictions every time, and it should not behave erratically when tested on new data.
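Explainability and reproducibility can be illustrated together. Permutation feature importance is one common model-agnostic way to see which input features a model relies on, though it is not necessarily the technique the authors have in mind. It scores each feature by how much accuracy drops when that feature's values are shuffled across rows. Seeding the random generator makes the shuffles, and therefore the scores, identical on every run. The following is a minimal sketch in plain Python. `toy_model`, `permutation_importance`, the data and the seed value are hypothetical.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def accuracy(model: Callable[[Row], int], X: Sequence[Row], y: Sequence[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=42):
    """Mean accuracy drop when one feature column is shuffled.

    A fixed seed on a local RNG makes every run produce the same scores.
    """
    rng = random.Random(seed)  # local RNG: no hidden global random state
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing feature j with its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model: it only reads feature 0 and ignores feature 1.
def toy_model(row: Row) -> int:
    return 1 if row[0] > 0.5 else 0


# Made-up toy data; labels follow the model's rule, so baseline accuracy is 1.0.
X = [[0.9, 0.1], [0.8, 0.7], [0.7, 0.3], [0.6, 0.9],
     [0.4, 0.2], [0.3, 0.8], [0.2, 0.4], [0.1, 0.6]]
y = [toy_model(row) for row in X]

scores = permutation_importance(toy_model, X, y)
print(scores)  # feature 1 scores 0.0 because the model never reads it
```

Running the function again with the same seed returns identical scores, which is the kind of consistency the reproducibility principle asks for. For real models, scikit-learn offers a ready-made `sklearn.inspection.permutation_importance` with `n_repeats` and `random_state` parameters that serve the same purpose.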

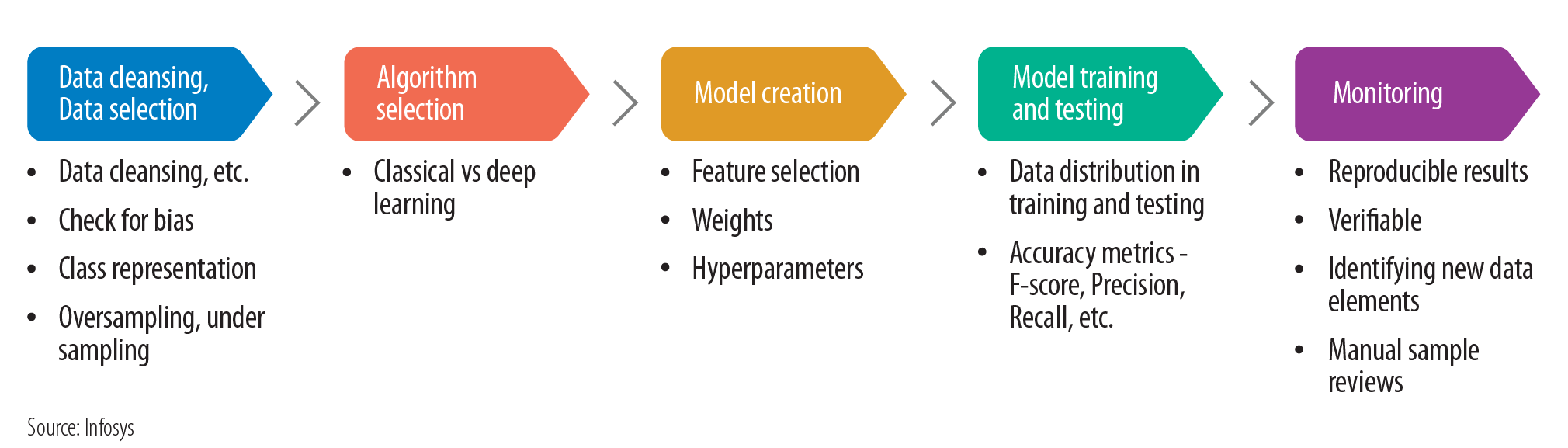

Many practitioners mistakenly assume that explainable AI (XAI) applies only at the output stage; however, XAI has a role throughout the AI life cycle. The key stages where it plays an important role are as follows.

Figure: XAI in the AI life cycle

AI systems therefore need consistent and continuous governance to keep them understandable and resilient across situations, and enterprises must build this governance into their AI adoption and maturity cycle.

To keep yourself updated on the latest technology and industry trends, subscribe to the Infosys Knowledge Institute's publications.