Artificial Intelligence

Agentic AI Ecosystem Evaluation

This white paper explores Agentic AI as a solution to the limitations of traditional AI systems by enabling autonomous, goal-driven behavior. It outlines the framework's key benefits, characteristics, and components, and provides guidance on selecting suitable agentic frameworks. The paper also discusses implementation challenges and explains the role of the Model Context Protocol (MCP) in enhancing agentic interactions.

Insights

- Traditional AI lacks autonomy and adaptability; Agentic AI addresses these gaps with goal-driven, self-directed behavior.

- Characteristics, components, and benefits of Agentic AI.

- Identify, evaluate, and compare agentic frameworks based on Core Features, Technical Capabilities, and Practical Considerations.

- Detailed comparison across open and closed agentic frameworks such as LangGraph, CrewAI, AWS Bedrock, Google ADK, and Salesforce Agentforce.

- Implementation challenges and how to resolve them.

- The role of MCP and A2A in the Agentic AI world.

Introduction

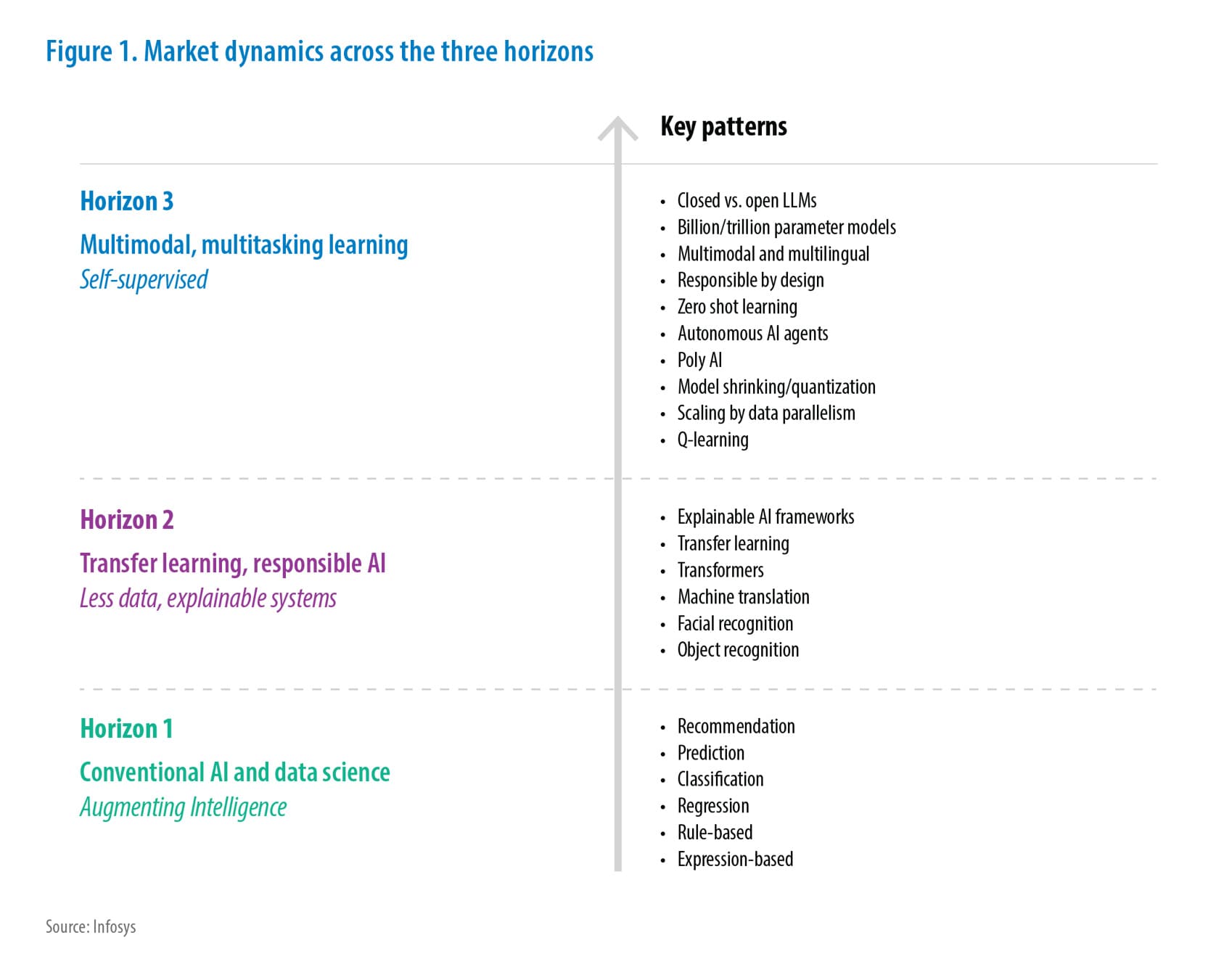

The emergence of Agentic AI marks a profound paradigm shift from traditional AI systems to advanced models capable of independent decision-making and continuous self-learning. This evolution reflects a journey through significant milestones that have progressively expanded AI’s capabilities and impact. Early AI systems were deterministic: they were rule-based and operated strictly on manually written instructions, with limited flexibility. The emergence of Machine Learning (ML) changed the landscape by enabling AI to learn from data, recognize patterns, and improve performance autonomously. This breakthrough empowered AI to tackle increasingly sophisticated tasks across diverse industries, from healthcare diagnostics to autonomous vehicles, greatly enhancing its practical utility.

Building on the foundation of ML, Generative AI introduced a transformative new dimension. Powered by large language models (LLMs) and advanced neural networks, Generative AI can create original content such as text, images, music, and code. This capability has unlocked creative collaboration and innovation across fields like design, entertainment, and software development, expanding AI’s role from analysis and prediction to creative generation.

Now, we stand at the threshold of the Agentic AI era, the next evolutionary leap. Unlike earlier AI systems that are reactive or generative within predefined limits, Agentic AI exhibits true agency. It autonomously sets goals, strategically plans, adapts dynamically to changing environments, and proactively takes actions to achieve objectives without requiring detailed human guidance. The ultimate goal of this progression is to reach Artificial General Intelligence (AGI), which represents the future of AI, enabling systems to understand, learn, and apply intelligence across a wide range of tasks at a human-like level.

Current limitations of the AI landscape

- Limited Autonomy and Adaptability: Most AI systems today excel at specific tasks, such as image recognition or natural language processing. However, they struggle to adapt to new situations or learn from their experiences in a truly autonomous way. They often require constant human intervention to retrain or adjust their parameters, limiting their ability to evolve and improve over time.

- Dependency on Human Oversight: Many AI applications, particularly those involved in critical decision-making, still heavily rely on human input and validation. This reliance introduces inefficiencies and increases the risk of errors, as human managers must constantly monitor and oversee AI outputs, slowing down processes and potentially introducing human biases.

- Siloed Operations: AI systems are often deployed in isolation, unable to seamlessly communicate and collaborate with other systems or respond effectively to dynamic changes in the environment. This siloed approach limits their ability to optimize business processes and achieve holistic outcomes.

- Scalability Challenges: Deploying and maintaining multiple AI systems for different business functions (e.g., customer service, fraud detection, marketing) can be complex and costly. This fragmented approach hinders the development of a unified and integrated AI ecosystem that can learn and adapt.

The Agentic AI Framework: A Solution to Challenges

Agentic AI refers to artificial intelligence systems that possess a degree of autonomy and decision-making capability, enabling them to take actions or make choices based on their environment, goals, and learned information. The key characteristic of agentic AI is that it can act independently without requiring constant direct human control or input.

Agentic AI and its benefits:

In the AI ecosystem, achieving efficiency, flexibility, and scalability is critical, particularly given the high failure rates of AI/ML models. With the recent surge in interest in GenAI, these three factors have become even more vital for businesses keen on adopting cutting-edge technology. This is driving rapid growth in the use of multi-AI agents.

- Efficiency

Multi-AI agents boost efficiency by delegating tasks to specialized agents, each tailored to handle specific responsibilities. This targeted approach speeds task completion and improves accuracy, while reducing bottlenecks and maximizing resource use, ultimately streamlining operations and enhancing productivity.

- Flexibility

Multi-AI agents bring unmatched flexibility by functioning independently, allowing the system to quickly adapt to shifting needs and dynamic environments. This agility helps businesses stay ahead of market trends and swiftly respond to changing customer demands.

- Scalability

The scalability of multi-AI agent systems is a key advantage. As demand rises, new agents can be seamlessly integrated into the system to manage higher workloads, ensuring smooth expansion without disrupting ongoing processes. This ability to scale effectively allows businesses to grow and handle increasing demand without losing efficiency.

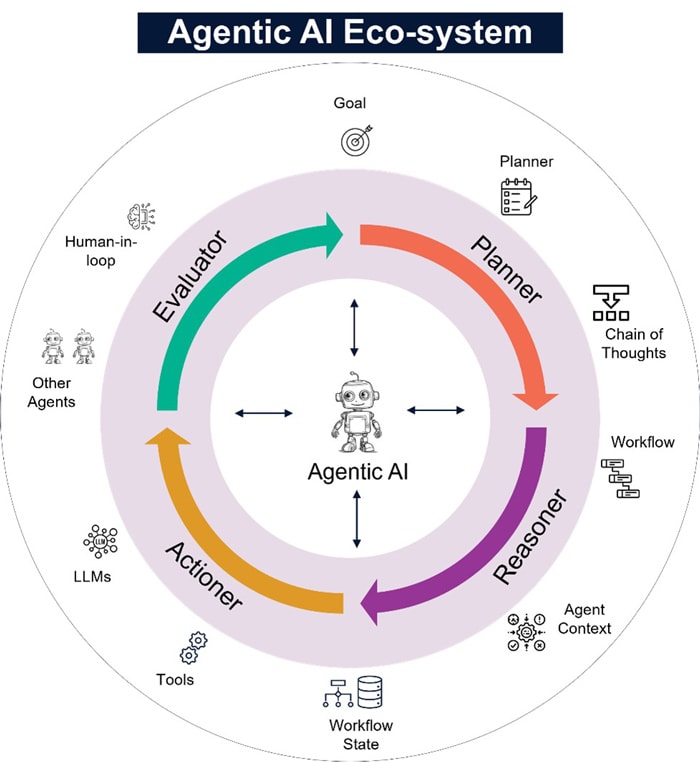

Key components of the Agentic AI Ecosystem

- Goal

Agentic AI operates with clearly defined objectives, either user-given or autonomously inferred. These goals act as the anchor for all planning, reasoning, and execution steps. Goals can be static or evolve over time based on context, user input, or environmental changes.

- Planner

A Planner component decomposes high-level goals into structured steps, subgoals, or action sequences. It coordinates what needs to be done, when, and how, optimizing efficiency, feasibility, and alignment with the overall objective.

- Chain of Thought

Agentic AI uses structured reasoning to simulate and justify decisions before taking action. This internal or externalized "thinking" process helps in selecting appropriate tools, resolving ambiguities, or evaluating alternative paths toward the goal.

- Workflow

A workflow represents the agent’s procedural path: the series of orchestrated actions, decisions, and tool invocations necessary to achieve the goal. It includes conditional logic, branching paths, retries, and step-by-step coordination.

- Agent Context

The agent maintains a rich contextual understanding, including memory of prior actions, environment state, task constraints, user preferences, and its own capabilities. This context informs decision-making and allows the agent to act coherently across time.

- Workflow State

This captures the current position within a workflow: what has been completed, what is in progress, what failed, and what remains. It enables recovery from interruptions, tracking dependencies, and maintaining continuity.

- Tools

Agentic AI leverages external tools (e.g., APIs, databases, web interfaces, system commands) to perform tasks beyond language reasoning. Tools are selected and invoked contextually to achieve specific subgoals.

- LLMs

Large Language Models (LLMs) serve as the reasoning engine and interface, interpreting user input, generating plans, simulating outcomes, and deciding next steps. They may be queried multiple times during planning or execution.

- Human in the Loop

While autonomous, Agentic AI often benefits from human feedback, approval, or override. Humans may intervene at critical decision points, provide additional context, or help refine goals, ensuring safety, alignment, or ethical constraints.
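To make the relationship between these components concrete, the following is a minimal sketch rather than a reference implementation. It wires a goal, a planner, a tool registry, agent context, workflow state, and a human approval hook into one loop. All names (`WorkflowState`, `plan`, `TOOLS`, `run_agent`) are hypothetical, and the planner is hard-coded where a real system would query an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class WorkflowState:
    """Tracks which steps are still pending, completed, or failed."""
    pending: List[str]
    completed: List[str] = field(default_factory=list)
    failed: List[str] = field(default_factory=list)


def plan(goal: str) -> List[str]:
    """Stand-in planner: a real system would ask an LLM to decompose the goal."""
    return ["fetch_data", "summarize"]


# Tool registry: each tool reads the agent context and returns a result.
TOOLS: Dict[str, Callable[[dict], str]] = {
    "fetch_data": lambda ctx: "raw records",
    "summarize": lambda ctx: f"summary of {ctx['fetch_data']}",
}


def run_agent(goal: str, approve: Callable[[str], bool]) -> Tuple[dict, WorkflowState]:
    context: dict = {"goal": goal}            # agent context: memory of prior results
    state = WorkflowState(pending=plan(goal))
    while state.pending:
        step = state.pending.pop(0)
        if not approve(step):                 # human-in-the-loop checkpoint
            state.failed.append(step)
            break                             # stop; later steps stay pending
        context[step] = TOOLS[step](context)  # tool invocation
        state.completed.append(step)
    return context, state


context, state = run_agent("Summarize sales data", approve=lambda step: True)
```

Because the workflow state is kept separate from the context, a run that stops at a rejected step still records what finished and what remains, which is the property that makes recovery possible.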

Characteristics of Agentic AI

Agentic AI systems are designed to operate autonomously, make informed decisions, and interact with their environment or users in a purposeful and goal-driven manner. These systems are composed of several key components that work together to enable intelligent behavior and adaptability. The primary components of Agentic AI include:

- Cognitive Processing (Reasoning and Decision-Making)

This component handles the core functions of reasoning, learning, and decision-making. It processes the data gathered through perception, interprets it, and makes informed decisions. Cognitive processing encompasses:

- Inference and reasoning: Deriving conclusions based on available data.

- Planning: Formulating a sequence of actions to achieve predefined objectives.

- Learning: Continuously improving decision-making through feedback, either by reinforcement learning or supervised learning.

- Action

The action component enables the AI to take specific steps based on the decisions it makes. Whether it's sending a command, generating a recommendation, or interacting physically with the environment, the action component drives the AI’s behavior to fulfill its goals.

- Communication

Effective communication is essential for Agentic AI, especially when interacting with humans or other agents. This can involve verbal communication through natural language processing (NLP), visual communication through image interpretation or generation, or exchanging data via APIs and other interfaces.

- Autonomy

Autonomy is a defining feature of Agentic AI. It allows the agent to operate independently, setting its own goals, making decisions, and taking actions without constant human oversight. Autonomy is crucial for enabling AI to function in dynamic, real-world environments where human intervention may not be feasible.

- Memory

Memory allows the AI to store and recall past experiences, actions, and their outcomes. By maintaining a memory of previous interactions, the agent can refine its decision-making process over time, adapting based on lessons learned from earlier actions.

- Context Awareness

Context awareness equips the agent with the ability to understand its environment, the specific task at hand, and the broader situation in which it operates. By recognizing the context, such as user preferences, market conditions, or external factors, the agent can make more relevant and effective decisions.

- Ethical and Safety Mechanisms

Ethical and safety considerations are integral to Agentic AI to ensure responsible and transparent operation. These mechanisms guide the agent to make decisions that align with ethical standards and avoid harmful consequences, particularly in sensitive or high-risk domains.

- Multi-Agent Coordination (if applicable)

In systems involving multiple agents, coordination becomes essential for achieving shared objectives and managing tasks effectively. This component involves communication and collaboration between agents, as well as resolving conflicts to ensure a harmonious and efficient operation.

- Feedback Loops

Continuous feedback is essential for the AI to adjust its behavior and improve over time. Feedback loops enable the system to learn from interactions with the environment or users, refining its actions and enhancing performance through iterative adjustments.

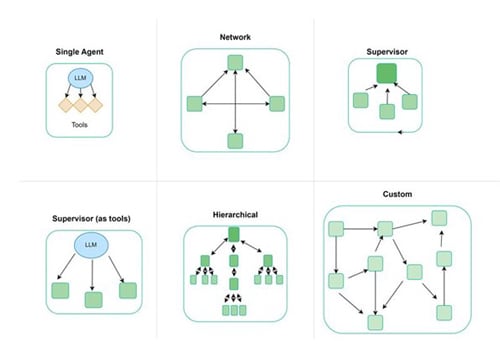

Agentic AI Architecture Patterns:

There are several ways to connect agents in a multi-agent system:

- Network: Each agent can communicate with every other agent. Any agent can decide which other agent to call next.

- Supervisor: Each agent communicates with a single supervisor agent. The supervisor agent decides which agent should be called next.

- Supervisor (tool-calling): A special case of the supervisor architecture. Individual agents are represented as tools, and the supervisor agent uses a tool-calling LLM to decide which agent tool to call and which arguments to pass.

- Hierarchical: Multi-agent system with a supervisor of supervisors. This is a generalization of the supervisor architecture and allows for more complex control flows.

- Custom multi-agent workflow: Each agent communicates with only a subset of agents. Parts of the flow are deterministic, and only some agents can decide which other agents to call next.
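As an illustration of the supervisor pattern described above, the following is a minimal sketch in plain Python. The agent names and the fixed routing order are assumptions made for the example. In practice the `supervisor` function would delegate the routing decision to an LLM.

```python
from typing import Callable, Dict, List, Optional

Agent = Callable[[str], str]

# Hypothetical worker agents; each receives the task and returns its output.
AGENTS: Dict[str, Agent] = {
    "researcher": lambda task: f"notes on {task}",
    "writer": lambda task: f"draft about {task}",
}


def supervisor(task: str, history: List[str]) -> Optional[str]:
    """Decide which agent runs next; None means the work is finished.

    A real supervisor would ask an LLM; this fixed order is a stand-in.
    """
    order = ["researcher", "writer"]
    return order[len(history)] if len(history) < len(order) else None


def run(task: str) -> List[str]:
    history: List[str] = []
    while (name := supervisor(task, history)) is not None:
        # Workers never call each other; control always returns to the supervisor.
        history.append(AGENTS[name](task))
    return history


results = run("agentic frameworks")
```

The network pattern would differ in one respect: each worker would return the name of the next agent itself, so no single function would own the routing decision.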

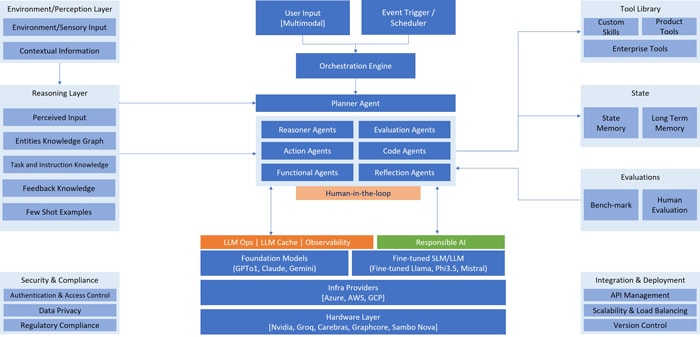

Agentic AI Reference Architecture:

The provided diagram outlines a sophisticated architectural framework for an Agentic AI system, designed for intelligent, autonomous, and adaptive behavior. This modular architecture integrates large language models (LLMs) with traditional AI components, emphasizing operational efficiency and ethical considerations.

The system is structured into key layers:

- Environment and Perception Layer

Environment/Sensory Input & Contextual Information: Gathers and processes raw, multimodal data from the environment, enriched by relevant background knowledge for comprehensive understanding.

- Reasoning Layer

Perceived Input, Entities Knowledge Graph, Task and Instruction Knowledge, Feedback Knowledge, Few Shot Examples: Transforms perceived data into actionable insights, leveraging structured knowledge, defined goals, past experiences, and illustrative examples to guide decision-making.

- Agentic Core (Planner Agent and Sub-Agents)

- Orchestration Engine: Manages overall system flow and coordinates interactions.

- Planner Agent: Orchestrates the entire system, breaking down high-level goals into executable sub-tasks. It strategizes the overall workflow and delegates to specialized agents.

- Reasoner Agents: Perform logical inference and problem-solving, analyzing information to draw conclusions. They help understand the current state and predict outcomes.

- Evaluation Agents: Assess the system's performance and the effectiveness of actions taken. They compare results against expectations, providing crucial feedback for improvement.

- Action Agents: Serve as the system's executors, translating plans into concrete operations. They directly interact with the external environment or initiate processes.

- Code Agents: Handle the generation, interpretation, and execution of code. They allow the system to interface with software, generate solutions programmatically, or adapt through self-modification.

- Functional Agents: Provide specific, encapsulated capabilities or services to the system. They perform specialized tasks efficiently when called upon by other agents.

- Reflection Agents: Engage in self-introspection, analyzing successes and failures to learn from experience. They identify areas for improvement and refine the system's strategies over time.

- Human-in-the-loop: Facilitates critical human oversight, intervention, and guidance for alignment and error correction.

- Tool Library

Custom Skills, Product Tools, Enterprise Tools: Provides access to diverse external capabilities, including specialized functionalities and integrations with commercial and internal enterprise systems.

- State Management

State Memory & Long-Term Memory: Maintains the agent's short-term context for ongoing tasks and persistent long-term knowledge for continuous learning and retention.

- Evaluations

Benchmark & Human Evaluation: Ensures reliability and performance validation through standardized tests and qualitative human assessments.

- LLM Operations (LLM Ops & LLM Cache | Observability)

- Foundation Models & Fine-tuned SLM/LLM: Leverages general-purpose and specialized LLMs for linguistic and reasoning capabilities.

- LLM Cache | Observability & Responsible AI: Manages LLM efficiency and monitors interactions while integrating practices for fairness, transparency, and safety.

- Infrastructure and Deployment Layers

Infra Providers & Hardware Layer: Provides cloud computing and specialized hardware acceleration for AI workloads.

- Security & Compliance

Authentication & Access Control, Data Privacy, Regulatory Compliance: Ensures secure, private, and legally compliant operation of the system.

- Integration & Deployment

API Management, Scalability & Load Balancing, Version Control: Facilitates practical deployment, management, and scaling of the agentic system.

Factors to Consider Before Selecting the Right Agentic Framework

Choosing the optimal agentic framework for a specific use case is crucial for successful agent-based system development. This decision hinges on several critical factors, each with varying degrees of importance depending on the nature of the application.

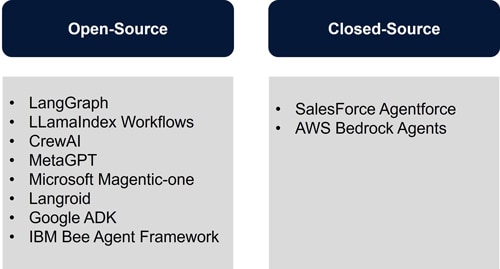

Agentic Frameworks considered for Evaluation

The parameters below help in selecting the right agentic framework.

Parameters for Core features

- Agent Modeling and Creation: The Agentic Framework should offer flexibility in defining agent behaviors, goals, and decision-making, along with support for planning and decomposing complex tasks into sub-tasks.

- Planning & Task Decomposition: The agentic framework should enable flexible planning of sub-tasks for complex inputs and support intelligent task delegation to the most suitable agents.

- Agent Hand-off: The agentic framework should support a flexible and seamless handoff of responsibilities between agents to complete the desired tasks.

- Context Passing: The agentic framework should allow flexible passing of contextual information to agents during invocation.

- State Management: The agentic framework should effectively manage execution state and define its scope clearly.

- Recursion Control: The agentic framework should offer flexibility to prevent agents from entering uncontrolled execution loops.

- Human in the Loop (HITL): The agentic framework should be capable of detecting when human intervention is needed and seamlessly handing over tasks, effectively integrating human intelligence into multi-agent AI systems.
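The Recursion Control parameter above can be illustrated with a simple step budget. This is a sketch under stated assumptions: `run_with_limit`, `RecursionLimitError`, and the `(new_state, done)` step contract are hypothetical names chosen for the example and are not taken from any of the evaluated frameworks.

```python
class RecursionLimitError(RuntimeError):
    """Raised when an agent exceeds its step budget."""


def run_with_limit(step_fn, state, max_steps=5):
    """Call step_fn repeatedly until it reports done or the budget runs out.

    step_fn(state) must return a (new_state, done) pair.
    """
    for _ in range(max_steps):
        state, done = step_fn(state)
        if done:
            return state
    raise RecursionLimitError(f"no result after {max_steps} steps")


def countdown(n):
    """Toy agent step that finishes once the counter reaches zero."""
    return n - 1, n - 1 <= 0


def never_done(n):
    """Toy agent step that would loop forever without a budget."""
    return n, False


finished = run_with_limit(countdown, 3)

try:
    run_with_limit(never_done, 0, max_steps=3)
    escalated = False
except RecursionLimitError:
    escalated = True  # a natural point to hand the task over to a human
```

Catching the limit error is also one plausible place to connect recursion control with HITL: when the budget is exhausted, the task can be escalated instead of silently abandoned.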

Parameters for Technical Capabilities

- Extensibility: The agentic framework should be able to adapt, evolve, and integrate with other technologies and applications, including the creation of new agent types, customization of agents, and integration with external systems and services.

- Scalability: The agentic framework should efficiently manage large numbers of agents and complex environments without notable performance degradation.

- Resiliency: The agentic framework should exhibit resilience to failures, disruptions, and unforeseen challenges, maintaining functionality and performance through error handling, recovery mechanisms, or agent redundancy for critical tasks.

- Portability: The agentic framework should enable the export and packaging of agents from a source environment and facilitate their import and deployment into a target environment using the same agentic framework.

- Security: The agentic framework should ensure the security and privacy of data and systems, especially when handling sensitive information. It should include built-in security features to protect data integrity and confidentiality.

- Observability: The agentic framework should allow tracking and understanding of an AI agent's decision-making process. It should include monitoring, logging agent actions, the data used, and the rationale behind its decisions, along with features for performance tracking and effective error handling.

- Agent Interoperability: The agentic framework should support the invocation of agentic components (e.g., agents, plug-ins, tools) from other agentic frameworks, ensuring seamless interoperability across platforms.

- Ease of Migration: The agentic framework should facilitate the migration of workflows and agents to other competing agentic frameworks with minimal effort and disruption.

- LLM Compatibility: The agentic framework should be compatible with large language models (LLMs) provided by various vendors and hyperscalers. It should clearly specify which LLMs are supported.

Parameters for Practical Considerations

- OOTB Agent Catalog: The agentic framework should offer a rich catalog of out-of-the-box agents, clearly indicating the domains or functional areas where they are most effective and applicable.

- Tech Stack: The agentic framework should clearly define its core technology components, including the programming languages, libraries, and tools used. It should also specify how developers can configure, extend, and integrate the framework using these technologies.

- Infra Capacity Requirement: The agentic framework should define the infrastructure requirements necessary to deploy and run use cases effectively on the platform.

- Framework Maturity: The agentic framework should demonstrate a high level of development and adoption, reflecting its stability, reliability, and real-world usage. Indicators include its release history, version stability, user base, and presence in production environments.

- Community and Support: The agentic framework should be supported by a strong, active community of developers and users, with comprehensive documentation, reliable support channels, and regular updates to ensure ongoing improvements.

- Agent Workflow Visualization: The agentic framework should provide effective tools or capabilities to generate visual representations of agent interactions and workflows—such as sequence diagrams or flowcharts (e.g., Mermaid diagrams)—directly from the code or configuration, aiding in better understanding, debugging, and documentation.

- License Type: The agentic framework should clearly state its licensing model, indicating whether it is open-source or closed-source, along with any usage restrictions, contribution guidelines, and implications for commercial or enterprise adoption.

Evaluation of Available Agentic Frameworks

This table compares various multi-agent frameworks, both open-source and closed-source, across several key parameters. Each framework's capability for a given parameter is scored as:

- High – H (Green): Indicates strong performance and comprehensive satisfaction of the parameter.

- Medium – M (Yellow): Suggests moderate performance or partial satisfaction.

- Low – L (Red): Denotes limited or insufficient capability regarding the parameter.

Agentic AI Evaluation Matrix – Core Features

| Parameter Name | LangGraph | LlamaIndex Workflows | CrewAI | MetaGPT | Langroid | Microsoft Magentic-One | IBM Bee Framework | AWS Bedrock Agents | Google Agent Development Kit (ADK) | Salesforce Agentforce |
|---|---|---|---|---|---|---|---|---|---|---|
| Agent Modeling and Creation | H | H | H | H | H | H | H | H | H | H |
| Planning & Task decomposition | L | H | M | M | L | L | L | M | H | H |
| Task Delegation | L | L | H | L | H | H | H | H | H | H |
| Agent hand-off | H | H | H | H | H | H | M | H | H | H |
| Context Passing | H | H | H | H | H | H | H | H | H | H |
| State Management | H | H | H | H | L | H | H | H | H | H |
| Recursion control | H | M | H | H | H | H | H | L | M | L |
| Human Agent Interaction (HITL) | H | H | H | H | H | H | L | H | M | H |
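One way to use the matrix above for a specific use case is to convert the ratings to numbers and weight the parameters that matter most. The sketch below assumes a mapping of H=3, M=2, L=1 and equal default weights. Neither assumption comes from this paper, and both should be adjusted per project. The ratings themselves are transcribed from the Core Features table.

```python
SCORE = {"H": 3, "M": 2, "L": 1}  # assumed numeric mapping, not defined by the paper

FRAMEWORKS = [
    "LangGraph", "LlamaIndex Workflows", "CrewAI", "MetaGPT", "Langroid",
    "Microsoft Magentic-One", "IBM Bee Framework", "AWS Bedrock Agents",
    "Google ADK", "Salesforce Agentforce",
]

# One string per parameter; character i is the rating for FRAMEWORKS[i].
MATRIX = {
    "Agent Modeling and Creation":    "HHHHHHHHHH",
    "Planning & Task decomposition":  "LHMMLLLMHH",
    "Task Delegation":                "LLHLHHHHHH",
    "Agent hand-off":                 "HHHHHHMHHH",
    "Context Passing":                "HHHHHHHHHH",
    "State Management":               "HHHHLHHHHH",
    "Recursion control":              "HMHHHHHLML",
    "Human Agent Interaction (HITL)": "HHHHHHLHMH",
}


def totals(weights=None):
    """Sum weighted scores per framework; every parameter defaults to weight 1."""
    weights = weights or {}
    result = dict.fromkeys(FRAMEWORKS, 0)
    for parameter, ratings in MATRIX.items():
        weight = weights.get(parameter, 1)
        for framework, rating in zip(FRAMEWORKS, ratings):
            result[framework] += weight * SCORE[rating]
    return result


equal = totals()
planning_heavy = totals({"Planning & Task decomposition": 3})
```

Comparing `equal` with `planning_heavy` shows how the ordering shifts once a team decides that built-in planning matters more than the other core features. A single total is only a starting point and does not replace the qualitative deep-dive that follows.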

Evaluation deep-dive for various Agentic AI frameworks for the identified core parameters

1. Agent Modeling and Creation:

- LangGraph uses the Reason and Act approach with an intuitive syntax for defining agent workflows. It enables easy creation and management of reusable tools across agents and offers LangGraph Studio, a low-code/no-code platform for agent development and execution.

- LlamaIndex provides a simple Python syntax to structure workflows where agents listen for events and execute tasks upon triggers, defining the flow of execution. It is based on event-driven architecture.

- CrewAI offers a flexible and modular way to define agents with roles, goals, and backstories. Agents can be defined programmatically or with YAML files, providing full customization. Flows manage multiple crews, agents, and tasks, allowing dynamic agent collaboration. Agents use tools flexibly, working within crews or independently.

- Langroid simplifies agent creation through configuration and instantiation. Agents use tools or function calls to perform tasks, with parameters like LLM, prompts, output format, and human-in-the-loop support. LLM agents manage tool usage and response generation.

- Microsoft Magentic-One is a multi-agent system where an Orchestrator directs specialized agents to perform web and file-based tasks. It includes agents like WebSurfer, FileSurfer, Coder, and ComputerTerminal.

- IBM Bee Framework includes a single agent, BeeAgent, based on the Reason and Act approach. It offers a modular agent modeling system with a base class to create custom agents. Currently, it supports one agent with multiple tools to solve a problem.

- AWS Bedrock provides a user-friendly interface to design agents visually, with pre-built templates and the option to create custom agents via code and configuration. Agents use action groups to define tasks and connect to a knowledge base for enhanced responses.

- Agent Development Kit (ADK) makes it easy to create agents using simple Python or Java code by passing parameters such as model, name, description, and instructions. It also lets developers define agent behavior using instructions, tools, and callbacks.

- Salesforce Agentforce lets businesses create intelligent agents with defined behaviors and goals. Agents can be built using low-code/no-code (LCNC) tools, such as Agent Builder, Prompt Builder, and Model Builder, with actions defined in natural language.

2. Task Delegation:

- LangGraph does not provide native task delegation but supports custom implementations using LLMs to identify and assign tasks to appropriate agents.

- LlamaIndex Workflows lacks built-in task delegation, though developers can implement LLM-driven custom solutions for dynamic task allocation.

- CrewAI includes a Manager agent that uses LLMs to delegate tasks dynamically to agents based on attributes like Name, Description, and Goal.

- MetaGPT does not inherently support task decomposition or delegation but allows implementation of a custom planner role using LLMs to assign subtasks.

- Langroid supports task delegation via a parameter enable_orchestration_tool_handling at the agent level, which enables automatic delegation to tools (enabled by default).

- Microsoft Magentic-One leverages an Orchestrator agent (e.g., LedgerOrchestrator) to direct tasks among predefined agents using round-robin or other strategies.

- IBM Bee Framework uses a built-in agent, BeeAgent, that internally delegates tasks to appropriate tools via LLM, though this functionality isn’t exposed for custom agents.

- AWS Bedrock Agents feature a supervisor agent that dynamically delegates subtasks to specialized agents, utilizing LLMs for efficient communication and routing to boost productivity and workflow clarity.

- Agent Development Kit (ADK) supports LLM-driven delegation, where tasks are analyzed, matched with the most appropriate agent, and dynamically routed through flexible mechanisms.

- Salesforce Agentforce incorporates the Atlas Reasoning Engine, which autonomously reasons about tasks and delegates them to the best-suited agent based on task requirements and agent capabilities.
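Several of the frameworks above delegate by matching a task against agent attributes such as name and description. The following sketch shows the shape of that decision. Simple word overlap stands in for the LLM-based matching, and `AgentSpec` and `delegate` are hypothetical names, not part of any framework's API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AgentSpec:
    name: str
    description: str


def delegate(task: str, agents: List[AgentSpec]) -> AgentSpec:
    """Pick the agent whose description shares the most words with the task.

    Word overlap is a stand-in for the LLM-based matching described above.
    """
    task_words = set(task.lower().split())
    return max(
        agents,
        key=lambda a: len(task_words & set(a.description.lower().split())),
    )


agents = [
    AgentSpec("billing", "handles invoice and payment questions"),
    AgentSpec("support", "troubleshoots login and account access problems"),
]
chosen = delegate("customer cannot complete payment for invoice", agents)
```

The practical takeaway is that delegation quality depends heavily on how well each agent's description is written, whichever matching mechanism is used.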

3. Planning and Task Decomposition:

- LangGraph lacks a built-in feature for breaking down complex problems into sub-tasks, but this can be handled via custom implementations using LLMs to create decomposition plans.

- LlamaIndex supports planning through its built-in structured planner agent, which can integrate LLMs like GPT-4 for effective task decomposition; however, GPT-3.5 shows inconsistency in generating reliable plans.

- CrewAI includes a planning feature activated with planning=True, which adds a step-by-step plan to task descriptions for improved execution. It suits conversational flow use cases but does not reorder tasks, which execute in their original sequence.

- Langroid does not have a built-in planner but allows custom agent implementations using LLMs to perform task decomposition and sequence execution.

- Microsoft Magentic-One similarly lacks a native planning feature but enables task breakdown through custom LLM-powered agent implementations.

- IBM Bee Framework does not offer a built-in planner but supports LLM-based custom planning strategies to decompose complex problems.

- AWS Bedrock employs chain-of-thought prompting with ReAct and introduces a supervisor agent to break down tasks into actionable steps, with support for supervisor mode and supervisor with routing mode for task collaboration.

- Agent Development Kit (ADK) uses the PlanReActPlanner to structure agent responses into phases: PLANNING, REPLANNING, REASONING, ACTION, and FINAL_ANSWER. This helps agents break down complex tasks and respond more effectively.

- Salesforce Agentforce provides task decomposition capabilities through its Atlas Reasoning Engine, which allows agents to reason and break tasks into manageable steps for specialized agents to execute.

4. Agent hand-off:

- LangGraph offers a built-in workflow feature that dynamically adjusts based on the planner's output, with agent handoffs occurring according to the defined flow; however, once initiated, the workflow cannot be altered.

- LlamaIndex enables smooth agent handoffs through its event-driven model, where agents are triggered by specific events.

- CrewAI supports both static and dynamic task-to-agent linking. After a task is completed, the next agent is triggered, and context can be passed between tasks using the context attribute to facilitate handoffs.

- Langroid manages agent handoffs at the main task level, with each task tied to an agent and its tools. Sub-task transitions are controlled by the main task based on prompt configurations.

- Microsoft Magentic-One uses an orchestrator agent to handle task delegation and agent handoffs by assigning responsibilities to specialized agents like WebSurfer or Coder, while tracking progress and adapting as needed.

- IBM Bee Framework does not support direct agent handoffs but can sequence tool usage and execution order through the single BeeAgent based on the problem’s requirements.

- AWS Bedrock employs a hierarchical model where a supervisor agent delegates tasks to specialized agents, facilitating structured agent handoffs and improving coordination in complex workflows.

- Agent Development Kit (ADK) hands over the responsibility of a task to another agent using the transfer_to_agent function call. AutoFlow acts as an intermediary mechanism that intercepts this function call, determines the appropriate target agent, and modifies the execution context to ensure the task is correctly routed.

- Salesforce Agentforce enables intelligent agent handoffs using the Atlas Reasoning Engine, which autonomously orchestrates actions and ensures task transitions to the most suitable agent based on expertise.
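The hand-off mechanisms above share a common shape: an agent signals that another agent should take over, and a runner performs the actual routing. The sketch below illustrates that shape in plain Python. It is loosely modeled on the transfer idea described for ADK but does not use ADK's API. `Transfer`, `triage`, `refunds`, and `run` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Union


@dataclass
class Transfer:
    """Returned by an agent that wants another agent to take over."""
    target: str


Reply = Union[str, Transfer]


def triage(request: str) -> Reply:
    return Transfer("refunds") if "refund" in request else "Handled by triage."


def refunds(request: str) -> Reply:
    return "Refund issued."


AGENTS: Dict[str, Callable[[str], Reply]] = {"triage": triage, "refunds": refunds}


def run(request: str, start: str = "triage", max_hops: int = 3) -> str:
    current = start
    for _ in range(max_hops):        # bound the hops so hand-offs cannot loop forever
        reply = AGENTS[current](request)
        if isinstance(reply, Transfer):
            current = reply.target   # the runner, not the agent, performs the routing
            continue
        return reply
    raise RuntimeError("too many hand-offs")


answer = run("I want a refund")
```

Keeping the routing in the runner means hand-offs can be logged and capped in one place, which ties this parameter back to observability and recursion control.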

5. Context Passing:

- LangGraph uses a state object to manage and maintain context across agents.

- LlamaIndex Workflows utilizes a context variable to handle context passing between agents.

- CrewAI provides a customizable state object for context flow. Within a crew, context is passed across tasks using the context attribute. Additionally, the Knowledge attribute enables context retention by referencing external information.

- Langroid uses a Message parameter for context passing across tasks.

- Microsoft Magentic-One maintains a chatHistory object to share context between agents.

- IBM Bee Framework offers a TokenMemory class to maintain and pass context across tools.

- AWS Bedrock Agents enable multi-agent collaboration by sharing conversation history, allowing the supervisor agent to pass full context to subagents, ensuring coherent interactions.

- Agent Development Kit (ADK) uses InvocationContext to manage task execution, carrying session data, agent info, user input, and a unique invocation ID. This context is automatically passed to agents and tools during runtime.

- Salesforce Agentforce transfers context via messaging session context variables (e.g., end-user contact ID), managed within Agentforce Builder under context settings.
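The common idea behind these mechanisms, a context object that each agent reads from and adds to, can be sketched in plain Python. The `Context` alias, the agent functions, and `run_pipeline` are illustrative assumptions rather than any framework's actual types.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Agent = Callable[[Context], Context]


def researcher(ctx: Context) -> Context:
    # Return a new dict so the caller's context is not mutated.
    return {**ctx, "findings": f"notes on {ctx['topic']}"}


def writer(ctx: Context) -> Context:
    # Reads what the previous agent added to the context.
    return {**ctx, "draft": f"Report based on {ctx['findings']}"}


def run_pipeline(agents: List[Agent], ctx: Context) -> Context:
    """Pass the context through each agent in order."""
    for agent in agents:
        ctx = agent(ctx)
    return ctx


final_ctx = run_pipeline([researcher, writer], {"topic": "agent handoffs"})
```

Returning a fresh dictionary from each agent keeps earlier snapshots intact, which makes it easier to inspect what each step contributed.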

6. State Management:

Different agent frameworks provide various methods for managing shared state and memory, essential for collaboration and task continuity among agents:

- LangGraph: Enables collaborative agent interactions through a shared state managed via Checkpoint, ensuring persistence within and across threads.

- LlamaIndex Workflows: Uses context objects for shared state and events (including custom ones) to facilitate inter-agent communication and data sharing.

- CrewAI: Offers multiple state management mechanisms:

  - Flow State Management with Pydantic's BaseModel for structured updates,

  - Crew-level Memory System for task continuity,

  - Caching (enabled by default) for state retention across interactions.

- Langroid: Lacks built-in global state support but allows developers to implement custom state handling using standard Python tools.

- Microsoft Magentic-One: Employs an Orchestrator that creates plans and maintains a task ledger to track progress, dynamically reallocating tasks when progress halts.

- IBM Bee Framework: Provides flexible memory types—Unconstrained, Sliding, Token, and Summarize Memory—to help agents retain context and enhance response quality.

- AWS Bedrock Agents: Maintain memory across sessions for up to 30 days, enabling more consistent and personalized agent interactions.

- Agent Development Kit (ADK): Manages execution state across agents and tools using Session State and Invocation Context. Session State acts as short-term memory for a session, while Invocation Context provides execution details like agent info, user input, and access to session data.

- Salesforce Agentforce: Relies on Flow and Apex for session-level state within interactions but requires custom setups for managing long-term memory across sessions.
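Checkpoint-based persistence, where state is saved per thread and restored later, can be sketched as follows. `InMemoryCheckpointer` and its methods are hypothetical and keep everything in memory; production frameworks typically persist snapshots to a database.

```python
import copy
from typing import Any, Dict, List


class InMemoryCheckpointer:
    """Keeps a history of state snapshots per thread id (hypothetical helper)."""

    def __init__(self) -> None:
        self._history: Dict[str, List[Dict[str, Any]]] = {}

    def save(self, thread_id: str, state: Dict[str, Any]) -> None:
        # Deep-copy so later mutations do not alter the stored snapshot.
        self._history.setdefault(thread_id, []).append(copy.deepcopy(state))

    def latest(self, thread_id: str) -> Dict[str, Any]:
        snapshots = self._history.get(thread_id)
        if not snapshots:
            return {}  # Unknown thread: start from an empty state.
        return copy.deepcopy(snapshots[-1])


checkpointer = InMemoryCheckpointer()
state = {"step": 1, "messages": ["hello"]}
checkpointer.save("thread-a", state)

# A change made after the checkpoint is not part of the saved snapshot.
state["messages"].append("unsaved change")
resumed = checkpointer.latest("thread-a")
```

Keying snapshots by thread id is what allows separate conversations to keep independent state while sharing one store.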

7. Recursion Control:

- LangGraph: Limits recursion using the recursion_limit parameter (default: 25), which can be adjusted.

- LlamaIndex Workflows: Uses the timeout parameter to cap workflow duration, cancelling the run when the limit is exceeded; if timeout is unset (None), looping steps can run unchecked. Workflows also require a StartEvent and a StopEvent to pass validation.

- CrewAI: Controls recursion with max_iter (default: 25), max_rpm, max_execution_time, and max_retry_limit (default: 2) to prevent excessive retries.

- Langroid: Uses turns in task execution (e.g., turns=2) to limit recursion; missing or invalid values trigger InfiniteLoopException.

- Microsoft Magentic-One: Manages recursion via max_rounds (e.g., 30) and max_time to limit agent duration.

- IBM Bee Framework: Provides advanced controls including AbortSignal.timeout, maxRetriesPerStep, totalMaxRetries, and maxIterations.

- AWS Bedrock Agents: Employs “Return of Control” for recursion handling and uses AWS Lambda loop detection to avoid infinite loops.

- Agent Development Kit (ADK): No direct recursion parameters; control depends on prompt/workflow design.

- Salesforce Agentforce: Lacks built-in recursion control but supports custom workflows and validation rules to avoid infinite loops.
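Most of the controls listed above combine an iteration cap with a time budget. The sketch below shows that combination in plain Python; `run_agent_loop`, `IterationLimitExceeded`, and the parameter names are assumptions made for illustration, with the default of 25 iterations chosen only to mirror the defaults mentioned above.

```python
import time
from typing import Callable, Optional


class IterationLimitExceeded(RuntimeError):
    """Raised when the loop ends without producing an answer."""


def run_agent_loop(
    step: Callable[[int], Optional[str]],
    max_iterations: int = 25,
    max_seconds: float = 30.0,
) -> str:
    """Call `step` until it returns an answer or a limit is reached."""
    deadline = time.monotonic() + max_seconds
    for i in range(max_iterations):
        if time.monotonic() > deadline:
            raise TimeoutError(f"time budget of {max_seconds}s exhausted")
        answer = step(i)
        if answer is not None:
            return answer
    raise IterationLimitExceeded(f"no answer after {max_iterations} iterations")


def finishes_on_third_call(i: int) -> Optional[str]:
    # Stand-in for an agent step that needs three iterations.
    return "done" if i == 2 else None


outcome = run_agent_loop(finishes_on_third_call, max_iterations=5)
```

Raising a distinct exception for each limit lets the caller decide whether to retry, escalate to a human, or abort.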

8. Human in the loop:

- LangGraph supports HITL workflows using interrupt_before and interrupt_after to pause execution and allow human input, with checkpoints to save graph state before intervention.

- LlamaIndex Workflows enable HITL through events, human prompts, and input-based decisions, with nested workflows for more complex use cases.

- CrewAI uses the human_input parameter in task definitions to prompt users before final answers for added context or validation.

- Langroid supports HITL by enabling the interactive parameter in tasks, allowing agents to request human feedback.

- Microsoft Magentic-One offers HITL via human_input_mode (hil_mode) and utilizes a User_proxy agent for interaction during execution.

- IBM Bee Framework lacks built-in HITL but allows human input via custom scripts at defined workflow points.

- AWS Bedrock Agents support HITL through a "return of control" mechanism, enabling human oversight at key stages.

- Agent Development Kit (ADK) supports Human-in-the-Loop (HITL) interactions, allowing agents to detect when human input is needed and incorporate it into the workflow.

- Salesforce Agentforce includes HITL interactions, letting agent bots escalate complex issues to human agents for resolution.
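The interrupt-before-step pattern behind many of these HITL features can be sketched without any framework. Here `run_with_approval` and `scripted_reviewer` are hypothetical; the reviewer is a scripted function standing in for a real person so that the example runs unattended.

```python
from typing import Callable, List, Set


def run_with_approval(
    steps: List[str],
    needs_review: Set[str],
    ask_human: Callable[[str], bool],
) -> List[str]:
    """Execute steps in order, pausing for approval on sensitive ones."""
    executed: List[str] = []
    for step in steps:
        # Pause before a sensitive step and skip it if the human declines.
        if step in needs_review and not ask_human(step):
            executed.append(f"skipped:{step}")
            continue
        executed.append(f"done:{step}")
    return executed


def scripted_reviewer(step: str) -> bool:
    # Stand-in for a human: approves everything except deletions.
    return step != "delete_records"


log = run_with_approval(
    ["fetch_data", "send_email", "delete_records"],
    needs_review={"send_email", "delete_records"},
    ask_human=scripted_reviewer,
)
```

In an interactive deployment, `ask_human` would block on a user interface or a messaging channel instead of returning immediately.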

Agentic AI Evaluation Matrix – Technical Capabilities

| Parameter Name | LangGraph | LlamaIndex Workflows | CrewAI | MetaGPT | Langroid | Microsoft Magentic-One | IBM Bee Framework | AWS Bedrock Agents | Google Agent Development Kit (ADK) | Salesforce Agentforce |
|---|---|---|---|---|---|---|---|---|---|---|
| Extensibility | H | H | H | H | H | M | H | H | H | H |
| Performance | H | H | H | M | H | H | H | H | H | H |
| Scalability | H | H | H | H | H | H | H | H | H | H |
| Resiliency | H | H | M | H | H | H | L | H | L | H |
| Portability | L | L | L | L | L | L | L | L | M | L |
| Security | L | L | L | L | L | M | L | H | H | H |
| Ease of Migration | L | L | L | L | L | L | L | L | H | L |
| LLM Compatibility | H | H | H | H | H | M | M | H | H | M |

Evaluation justification for various Agentic AI frameworks for the identified Technical parameters

1. Extensibility:

- All frameworks emphasize extensibility through custom tools, integrations, and adaptability to varied requirements.

- LangGraph, LlamaIndex, and IBM Bee Framework provide high flexibility for defining custom tools and enabling agent interaction with external systems.

- CrewAI allows dynamic association of custom tools with agents or tasks and includes built-in features like RAG search and code interpretation.

- Langroid supports tool creation using standard Python functions, which can be integrated through a simple messaging interface.

- AWS Bedrock Agents stand out with capabilities to define new agent types, customize behaviors, and integrate using standardized interfaces and AWS-native tooling for scalable growth.

- Agent Development Kit (ADK) supports extensibility by allowing developers to create custom agent types, build multi-agent systems, and integrate with external tools like FunctionTool, OpenAPITool, and Google Cloud Tools. Agent behavior can be refined over time using customizable callbacks.

- Salesforce Agentforce emphasizes integration within the Salesforce ecosystem via APIs, MuleSoft, and pre-built connectors.

- Microsoft Magentic-One, still in early development and not available on PyPI, currently lacks formal support for agent customization and integration, making it less extensible than the others.

2. Performance:

- Many agent frameworks enhance performance using asynchronous processing, parallel execution, and scalable infrastructure.

- LangGraph, Langroid, and LlamaIndex utilize Python’s async programming to enable parallel task execution, sustaining performance as applications grow.

- CrewAI supports asynchronous workflows with methods like kickoff_async() and kickoff_for_each_async(), enabling concurrent task execution. Its @start() decorator also facilitates parallel execution during flow initiation.

- IBM Bee Framework uses TypeScript's async capabilities to support parallelism at scale.

- Microsoft Magentic-One supports asynchronous execution but may see performance variance due to dependencies like Bing for web browsing.

- AWS Bedrock Agents deliver scalable performance via serverless architecture powered by AWS Lambda, offering automatic resource scaling.

- Agent Development Kit (ADK) achieves strong performance through key architectural choices such as an asynchronous execution model, efficient state management, and a built-in evaluation framework.

- Salesforce Agentforce boosts performance through deep integration within the Salesforce ecosystem, reusable built-in actions, and an infrastructure that scales with demand.
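The asynchronous, parallel execution that several frameworks rely on can be illustrated with Python's standard `asyncio` module. The `call_tool` coroutine is a hypothetical stand-in for a network-bound tool or LLM call, simulated here with a short sleep.

```python
import asyncio
import time
from typing import List


async def call_tool(name: str, delay: float) -> str:
    # Simulates an I/O-bound call such as an API request.
    await asyncio.sleep(delay)
    return f"{name}:ok"


async def run_concurrently() -> List[str]:
    # gather() runs the coroutines concurrently and keeps argument order.
    return await asyncio.gather(
        call_tool("search", 0.2),
        call_tool("summarize", 0.2),
        call_tool("translate", 0.2),
    )


start = time.perf_counter()
results = asyncio.run(run_concurrently())
elapsed = time.perf_counter() - start
```

Because the three calls wait concurrently, the total time stays close to the longest single call rather than the sum of all three.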

3. Scalability:

- LangGraph is built for scalability with a modular agent architecture, asynchronous execution, distributed deployment, and efficient memory management, enabling parallel processing and optimal resource use across large datasets and complex models.

- LlamaIndex Workflows scale using an event-driven design and simple syntax for defining agents and events, efficiently managing large numbers of agents for extensive applications.

- CrewAI supports scalability with asynchronous execution, dynamic task allocation, and customizable manager agents, enabling seamless system growth and efficient resource use.

- Langroid scales via independent task-based agents, dynamic orchestration, and modular tool integration, supporting parallel execution and adaptability to complex tasks.

- Microsoft Magentic-One handles scalable, event-driven tasks like web browsing and code generation but currently relies on fixed, predefined agents with no custom agent support.

- IBM Bee Framework ensures scalability with asynchronous parallel processing, modular architecture, and customizable tools, making it suitable for growing and complex workflows.

- AWS Bedrock Agents use AWS Lambda’s serverless architecture to scale automatically, adjusting resources to match real-time demand.

- Agent Development Kit (ADK) combines a modular architecture, dynamic agent management, flexible execution models, resource monitoring, and multiple deployment options to handle large-scale agent systems and complex environments efficiently.

- Salesforce Agentforce, powered by Salesforce Cloud, scales efficiently to support millions of users and enterprise-grade complexity, with performance managed through appropriate subscription tiers.

4. Resiliency:

- LangGraph enhances fault tolerance through checkpointing, enabling node failure recovery by resuming from the last successful super step while avoiding redundant computations with preserved checkpoint data.

- LlamaIndex Workflows provide a fault-tolerant, event-driven architecture with built-in retry mechanisms and failure handling for scalable and reliable GenAI system deployment.

- CrewAI focuses on task and tool execution resiliency, offering automatic task retries, tool error handling, context window management, task delegation, and timeout handling, though it lacks built-in agent-level failover.

- Langroid supports fault tolerance via dynamic error handling, task delegation, and multi-agent collaboration to ensure smooth execution and recovery from failures.

- Microsoft Magentic-One uses an Orchestrator and a Progress Ledger to monitor tasks and assign subtasks if incomplete, improving system resilience.

- IBM Bee Framework does not offer native agent failover or tool replacement, requiring developers to implement custom fault-tolerance mechanisms.

- AWS Bedrock Agents rely on infrastructure best practices such as load balancing, error handling, and monitoring but lack built-in agent-level failover.

- Agent Development Kit (ADK) includes error handling, recovery, monitoring, and alerting features, but some users have reported challenges with managing tool failures, indicating that its resiliency is currently limited.

- Salesforce Agentforce ensures resilience by automatically rerouting tasks or escalating to human agents, maintaining workflow continuity and redundancy.
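Where a framework lacks built-in failover, a custom retry-then-fallback wrapper is a common starting point. The following is a minimal sketch under that assumption; `call_with_retries` and the sample tools are hypothetical and not taken from any framework above.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    max_retries: int = 2,
    base_delay: float = 0.01,
) -> T:
    """Try `primary` up to max_retries + 1 times, then use `fallback`."""
    for attempt in range(max_retries + 1):
        try:
            return primary()
        except Exception:
            if attempt < max_retries:
                # Exponential backoff between attempts.
                time.sleep(base_delay * (2 ** attempt))
    return fallback()


attempts = {"count": 0}


def flaky_tool() -> str:
    # Fails twice, then succeeds on the third call.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("transient failure")
    return "primary result"


def always_down() -> str:
    raise ConnectionError("service unavailable")


recovered = call_with_retries(flaky_tool, lambda: "fallback result")
degraded = call_with_retries(always_down, lambda: "fallback result", max_retries=1)
```

A production version would normally catch only specific, retryable exception types and log each failure instead of swallowing it.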

5. Portability:

- Most agent frameworks lack native, built-in portability features.

- However, portability can be achieved through custom implementation, primarily by containerizing the Agentic Framework and its dependencies.

- This method involves creating a container image (e.g., using Docker) and deploying it in a containerized environment, ensuring the framework runs consistently across different platforms.

- Containerization allows for environment-agnostic deployment, improves flexibility, and reduces the need to alter the underlying code when moving between systems.

- Agent Development Kit (ADK) agents can be containerized with Docker to package all dependencies and configurations, and deployed across platforms like Kubernetes, Google Cloud Run, AWS ECS, and Azure Container Apps.

6. Security:

- Agent Development Kit (ADK) includes identity and authorization controls, safe execution guardrails, sandboxed code execution, behavior monitoring, audit logs, and network-level security measures, while also leveraging security features inherited from GCP.

- Salesforce Agentforce ensures secure interactions with encryption, access controls, toxicity detection, and prebuilt actions designed for secure customer engagement.

- AWS Bedrock includes comprehensive security through data encryption, role-based access control, compliance with industry standards, and the use of Amazon Bedrock Guardrails for added protection.

- Other frameworks generally lack native security features and require custom implementations, which should focus on data protection, access control, regular updates, data minimization, privacy-preserving techniques, and transparency in data use.

7. Ease of Migration:

- None of the current frameworks offer a specific, built-in migration path for transferring Flows or Agents between systems.

- However, general migration guidelines can be applied to support transitions within multi-agent environments.

- This typically involves abstracting logic, standardizing agent interfaces, isolating tool dependencies, and using containerization or API-based integration to reimplement or port agents across different frameworks with minimal disruption.

- Agent Development Kit (ADK) enables developers to seamlessly transition agents and workflows to other agentic frameworks with minimal friction, supported by A2A and MCP tools.
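The guideline of standardizing agent interfaces can be sketched with a small adapter layer. `PortableAgent`, the two adapter classes, and the calling conventions they wrap are invented for illustration; they stand in for whatever invocation style a given framework actually uses.

```python
from typing import Callable, Dict, Protocol


class PortableAgent(Protocol):
    """Framework-neutral interface that application code depends on."""

    def run(self, task: str) -> str: ...


class DictStyleAdapter:
    """Wraps a hypothetical framework whose agents take and return dicts."""

    def __init__(self, invoke: Callable[[Dict[str, str]], Dict[str, str]]) -> None:
        self._invoke = invoke

    def run(self, task: str) -> str:
        return self._invoke({"input": task})["output"]


class CallableStyleAdapter:
    """Wraps a hypothetical framework whose agents are plain callables."""

    def __init__(self, fn: Callable[[str], str]) -> None:
        self._fn = fn

    def run(self, task: str) -> str:
        return self._fn(task)


def summarize_with(agent: PortableAgent, text: str) -> str:
    # Application logic sees only the neutral interface.
    return agent.run(f"summarize: {text}")


old_agent = DictStyleAdapter(lambda req: {"output": req["input"].upper()})
new_agent = CallableStyleAdapter(lambda task: task.upper())

before = summarize_with(old_agent, "q3 results")
after = summarize_with(new_agent, "q3 results")
```

With this separation, moving to another framework means writing one new adapter while the application logic stays unchanged.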

8. LLM Compatibility:

- LangGraph, LlamaIndex Workflows, CrewAI, Langroid, and AWS Bedrock Agents offer broad compatibility, supporting all types of LLMs.

- ADK integrates models mainly via two methods:

  - Direct String/Registry: For tightly integrated Google Cloud models (e.g., Gemini via AI Studio or Vertex AI), you provide the model name or endpoint string to LlmAgent. ADK’s registry then maps it to the correct backend using the google-genai library.

  - Wrapper Classes: For broader compatibility, including non-Google models or those needing special configurations (e.g., LiteLLM), you create a wrapper class instance and pass it to LlmAgent as the model.

- Microsoft Magentic-One is currently limited to OpenAI’s GPT-4o, restricting model flexibility.

- IBM Bee Framework includes built-in adapters for OpenAI, Ollama, WatsonX, and Groq, and users can request additional inference providers. If approved, Bee either builds the adapter or allows users to contribute one via its Git repository.

- Salesforce Agentforce primarily supports its proprietary models but offers a low-code Model Builder that lets users register, test, and deploy custom AI models and LLMs.
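The two integration styles described for ADK, a model name resolved through a registry or a wrapper object passed in directly, can be illustrated with a framework-agnostic sketch. This is not ADK code: `MODEL_REGISTRY`, `EchoModel`, `WrappedModel`, and `resolve_model` are hypothetical names, and the "models" simply format strings.

```python
from typing import Callable, Dict, Union


class EchoModel:
    """Stand-in for a natively supported model backend."""

    def __init__(self, name: str) -> None:
        self.name = name

    def generate(self, prompt: str) -> str:
        return f"{self.name} says: {prompt}"


class WrappedModel:
    """Stand-in for a wrapper around an external provider's client."""

    def __init__(self, complete: Callable[[str], str]) -> None:
        self._complete = complete

    def generate(self, prompt: str) -> str:
        return self._complete(prompt)


# Hypothetical registry: model-name prefix -> backend factory.
MODEL_REGISTRY: Dict[str, Callable[[str], EchoModel]] = {"demo-": EchoModel}


def resolve_model(model: Union[str, EchoModel, WrappedModel]):
    """Accept either a registered model name or a ready-made model object."""
    if isinstance(model, str):
        for prefix, factory in MODEL_REGISTRY.items():
            if model.startswith(prefix):
                return factory(model)
        raise ValueError(f"no backend registered for model {model!r}")
    return model  # Already an object exposing generate().


from_string = resolve_model("demo-small")
from_wrapper = resolve_model(WrappedModel(lambda prompt: prompt.upper()))
```

The string path keeps configuration short for supported models, while the wrapper path leaves room for providers the registry does not know about.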

Agentic AI Evaluation Matrix – Practical Considerations

| Parameter Name | LangGraph | LlamaIndex Workflows | CrewAI | MetaGPT | Langroid | Microsoft Magentic-One | IBM Bee Framework | AWS Bedrock Agents | Google Agent Development Kit (ADK) | Salesforce Agentforce |
|---|---|---|---|---|---|---|---|---|---|---|
| OOTB Agent catalog | L | L | H | L | L | H | H | H | H | H |
| Tech stack | H | H | H | H | H | H | H | H | H | H |
| Infra capability requirement | H | H | H | H | H | H | H | H | H | H |
| Framework Maturity | M | M | M | M | M | M | M | H | H | M |
| Community Support | H | H | H | H | H | H | H | H | H | H |
| Agent Workflow visualization | H | H | H | H | H | L | L | L | H | H |
| License Type | H | H | H | H | H | H | H | M | H | M |

Evaluation deep-dive for various Agentic AI frameworks for the identified Practical parameters

1. OOTB Agent Catalog:

- LangGraph, Langroid, and LlamaIndex Workflows share a similar approach, as none of them provide out-of-the-box (OOTB) default agents. Instead, they offer flexible frameworks that empower users to define and create their own agents with specific instructions and capabilities.

- MetaGPT offers a limited set of built-in agents tailored to SDLC (Software Development Life Cycle) roles such as product manager, architect, developer, and QA engineer. It also supports user-defined custom agents.

- CrewAI does not provide default agents but has a wide community-driven use-case base across domains like Customer Service, Marketing, and Fintech. It includes a rich library of built-in tools that can be leveraged for building agents.

- Microsoft Magentic-One includes specialized agents such as Orchestrator, FileSurfer, Coder, and ComputerTerminal, designed specifically for handling open-ended tasks involving web and file-based operations.

- IBM Bee Framework provides a built-in BeeAgent that follows a Reason-and-Act paradigm. This agent dynamically selects tools in collaboration with LLM to solve problems.

- Amazon Bedrock Agents come with OOTB agent blueprints and code samples, offering pre-configured templates that accelerate development by embedding best practices and optimized configurations.

- Agent Development Kit (ADK) provides a comprehensive Out-of-the-Box (OOTB) Agent Catalog, offering developers a wide range of pre-built agents for various domains.

- Salesforce Agentforce delivers a pre-built catalog of agents tailored to common business use cases in Sales, Service, Marketing, and Commerce.

2. Technology Stack:

- LangGraph and LlamaIndex Workflows support both Python and JavaScript, offering flexibility for developers working in either language.

- Langroid and CrewAI are primarily Python-based, with CrewAI also utilizing YAML for configurations.

- MetaGPT requires Python 3.9+ and supports Node.js or Python for rendering visualizations using the Mermaid engine.

- Microsoft Magentic-One supports both Python (version >= 3.8 and < 3.13) and .NET, providing options for developers on either stack.

- IBM Bee Framework is built using TypeScript and JavaScript, aligning with modern front-end and backend development practices.

- AWS Bedrock Agents offer broad multi-language SDK support, including Java, JavaScript, Python, CLI, .NET, Ruby, PHP, Go, and C++. These SDKs enable integration across a variety of platforms, and Amazon provides extensive language-specific code examples.

- Agent Development Kit (ADK) supports implementation in both Python and Java.

- Salesforce Agentforce is built on Salesforce's native technologies, including Apex, Flows, Einstein, Lightning, and Salesforce Data Cloud, emphasizing seamless integration within the Salesforce ecosystem.

3. Infra Capacity Requirement:

- LangGraph and Langroid do not come with a pre-packaged deployment stack. Both frameworks allow flexibility in deployment, supporting a variety of tools and stacks based on the team's preferences. Common options include AWS, Google Cloud Platform (GCP), Azure, Docker, Kubernetes, and Jenkins. They generally require a small to medium-size runtime based on the complexity of the use case, with infrastructure capacity to either host LLMs locally or use cloud-hosted LLMs.

- LlamaIndex Workflows uses LlamaDeploy for deploying and scaling workflows as multi-service systems. Deployment is done via the llamadeploy and llamactl CLI, with YAML configuration files. LlamaCloud, a cloud-based service for document indexing and searching, is currently in private alpha. It requires minimal infrastructure for small to medium-size runtimes.

- CrewAI can be deployed on self-hosted infrastructure or through preferred cloud services. Like LangGraph and Langroid, it needs a small to medium-size runtime and the ability to host LLMs locally or leverage cloud-hosted LLMs based on the business case.

- MetaGPT requires a small to medium-size runtime depending on the business use case. It integrates with both open-source and closed-source models, and the infrastructure should be capable of hosting models if integrating open-source ones.

- Microsoft Magentic-One requires a small to medium-size runtime and the installation of Docker for code execution and running examples in a containerized environment.

- IBM Bee Framework can be deployed with a small to medium-size runtime, with no specific infrastructure demands other than what is needed for the use case.

- AWS Bedrock Agent is a fully managed service, meaning there are no specific infrastructure requirements for customers. AWS handles the underlying infrastructure, scaling, and service management. Users only need an AWS account with the necessary permissions.

- System requirements for ADK vary based on scale, complexity, and deployment environment. For local development, recommended specs include a modern multi-core CPU, at least 8 GB RAM, SSD storage, Python 3.9+, Docker (optional), and Google Cloud SDK for deployment.

- Salesforce Agentforce is a cloud-native platform that requires access to Salesforce Cloud infrastructure and cannot be deployed outside of the Salesforce ecosystem.

4. Framework Maturity:

- LangGraph, LlamaIndex Workflows, CrewAI, MetaGPT, Langroid, Microsoft Magentic-One, and IBM Bee Framework are in the early stages of maturity, having been introduced between early 2023 and late 2024. These frameworks are actively evolving, with regular updates and growing community engagement:

  - LangGraph (launched Jan 2023) is a low-level orchestration framework with version 0.2.48 as of Nov 14, 2024.

  - LlamaIndex Workflows is in beta (v0.11.22 as of Nov 6, 2024) yet has shown fast-paced development and traction.

  - CrewAI is gaining industry credibility with over 100 million agent executions and endorsements from major firms like Oracle and PwC, with version 0.80 as of Nov 13, 2024.

  - MetaGPT, Langroid, IBM Bee Framework, and Microsoft Magentic-One are all in early development stages with ongoing feature expansion.

- AWS Bedrock Agents have reached a fair stage of maturity. Initially launched in preview in April 2023 and made generally available (GA) in November 2023, the platform benefits from AWS’s enterprise-grade infrastructure and has matured through several iterations.

- Salesforce Agentforce is relatively new but backed by Salesforce's established cloud ecosystem and enterprise reputation. Despite its youth, its adoption is expected to be strong, especially among existing Salesforce customers.

- Agent Development Kit (ADK) was launched in April 2025 and, while already mature, it continues to evolve with new features and ongoing improvements.

5. Community Support:

- LangGraph offers comprehensive documentation and is supported by the LangChain ecosystem, ensuring a rich community experience. It has 3486 contributors and is used by 189K users.

- LlamaIndex Workflows also provides comprehensive documentation and has a strong community. With over 20K community members, 1.3K contributors, and 2.8M monthly downloads, it is highly popular and actively used. The framework has seen 13K+ applications built on it, showcasing its widespread adoption.

- CrewAI benefits from comprehensive documentation, though its community is still growing. It has a large GitHub presence with 20K+ stars but has a relatively small active user base, with 2.3K posts and 30 daily active users. Despite this, there are other repositories and solutions that support additional features, such as GUIs and monitoring. GitHub stats show it is used by 9.5K users with 214 contributors.

- MetaGPT offers comprehensive documentation and has a growing community, with 113 contributors actively helping to improve the framework.

- Langroid has good community engagement on GitHub, with discussions and collaborations happening regularly. It has 37 contributors, indicating that its community is still in its early phases.

- Microsoft Magentic-One is supported by extensive GitHub documentation and has a growing community, though still in the early stages. With 35.5K stars and 460 contributors, it has gained significant traction but has a smaller active community compared to older frameworks. It is used by 2.8K users.

- IBM Bee Framework offers documentation on GitHub, but the community support is still developing as the framework is relatively new. It has 32 contributors and is growing.

- AWS Bedrock Agent enjoys comprehensive documentation and rich community support through various AWS resources. It benefits from AWS Developer Forums, AWS Partner Network (APN), and AWS blogs, making it well-supported within the AWS ecosystem.

- Google Agent Development Kit (ADK) provides strong community engagement, detailed documentation, and dependable support channels, creating a collaborative and developer-friendly ecosystem.

- Salesforce Agentforce benefits from Salesforce’s large, active community, with extensive documentation and professional support options. The Salesforce Developer Community and Trailhead provide a wealth of learning resources, making it well-supported.

6. Agent Workflow visualization:

- LangGraph: While it doesn’t have a built-in visualizer, LangGraph supports creating custom visualizations using third-party libraries like Graphviz or NetworkX. These visualizations can be compatible with Mermaid syntax for workflow depiction, allowing both graphical images and Mermaid code generation.

- LlamaIndex Workflows: This framework supports built-in agent workflow visualization with a tool called draw_all_possible_flows, which generates an interactive HTML file that can be opened in a browser to visualize workflows.

- CrewAI: Offers comprehensive workflow visualization through:

  - plot() Method: You can generate interactive HTML plots directly from a flow instance.

  - CLI Support: Structured CrewAI projects can be visualized using the command line.

  - Third-Party Tools: External tools like CrewAI-GUI and CrewAI-Studio provide a node-based, no-code interface for creating AI workflows with visual representation.

- MetaGPT: Does not have built-in agent workflow visualization. It integrates with Mermaid Engine for competitive_analysis diagrams, allowing visualizations based on the roles and their interactions, but it does not visualize agent workflows directly.

- Langroid: Features basic workflow visualization where the flow of agent invocation is shown through indentation, making it easy to track which agent is started or ended. However, it is a textual representation rather than graphical.

- Microsoft Magentic-One: Does not support agent workflow visualization, and no built-in visual tools are available for this framework.

- IBM Bee Framework: Lacks built-in workflow visualization features. Custom visualizations can be created manually by analyzing the interactions between Bee agents and tools.

- AWS Bedrock Agent: Does not automatically generate visual diagrams but offers tracing features and a trace and debug console for manual analysis of multi-agent interactions.

- Agent Development Kit (ADK): Includes features for workflow visualization. Its Development UI offers a web-based interface for testing and debugging agents, featuring an event graph that visualizes agent interactions and event flows to help understand complex workflows.

- Salesforce Agentforce: Supports workflow visualization through Flow Builder, allowing for a visual representation of agent workflows, though it doesn't support direct Mermaid diagram generation from the code.

7. License Type:

- LangGraph, LlamaIndex Workflows, CrewAI, MetaGPT, Langroid, and Microsoft Magentic-One are all open-source with MIT licenses. LangGraph and LlamaIndex also offer enterprise versions with commercial licenses, while CrewAI has both open-source and enterprise license options.

- IBM Bee Framework and Agent Development Kit (ADK) use the Apache 2.0 License.

- AWS Bedrock Agents is a proprietary, fully managed AWS service with pay-per-use pricing.

- Salesforce Agentforce is proprietary, requiring a Salesforce Service Cloud license and using a conversation-based pricing model.

Challenges and Considerations in Implementing Agentic AI

Implementing Agentic AI comes with a unique set of technological, operational, and organizational challenges that need to be addressed for successful integration. These challenges vary based on the industry and the complexity of the AI application. Below are some of the major technical and operational hurdles:

- Real-Time Processing Requirements: Many Agentic AI applications, such as autonomous systems or real-time decision-making systems, require processing vast amounts of data in real time. This poses a challenge in terms of the hardware, computational power, and software optimization needed to handle the scale and speed of data processing.

- Scalability Issues: While a proof-of-concept or small-scale pilot may work well, scaling Agentic AI to a larger operational level can be difficult. Operational scalability often involves addressing issues such as distributed computing, load balancing, and efficient resource management, all of which can create significant technical barriers.

- Interoperability: Agentic AI needs to integrate seamlessly with existing systems and infrastructure, which may involve multiple different technologies, platforms, and data standards. Ensuring interoperability between diverse systems without creating conflicts or inefficiencies remains a critical challenge.

- Security and Privacy Concerns: With AI systems taking more autonomous decisions, ensuring their security against adversarial attacks is crucial. Additionally, AI applications that process sensitive data, such as in healthcare or finance, need to adhere to strict data privacy regulations, which can increase the complexity of implementing Agentic AI systems.

- Skill Gaps and Talent Shortage: Developing and managing Agentic AI systems requires expertise in AI, machine learning, data science, and domain-specific knowledge. Many organizations face difficulties in attracting and retaining skilled talent. Additionally, existing teams may require reskilling or upskilling, which requires investment in training programs.

- Ethical and Regulatory Compliance: Ensuring that Agentic AI systems operate ethically and comply with regulations is a key consideration. This includes concerns around accountability (who is responsible for decisions made by AI?), bias in AI models, and the transparency of decision-making processes. Organizations must navigate the evolving regulatory landscape and develop frameworks for ethical AI governance.

- Cost of Implementation: The development, integration, and maintenance of Agentic AI systems can be costly, especially for small and medium-sized enterprises. While Agentic AI can lead to significant long-term cost savings, the upfront investment in technology infrastructure, talent, and research and development can be a deterrent.

Role of MCP (Model Context Protocol) in Agentic AI

Agentic AI envisions autonomous agents that don’t just generate text or answers but actively perform complex tasks and interact dynamically with the real world. The key challenge has been enabling these AI agents to seamlessly connect with and control a wide variety of tools, services, and data sources without the bottleneck of custom, one-off integrations for every new capability.

That’s where the Model Context Protocol comes in. MCP is an open, standardized communication protocol designed specifically to empower agentic AI by creating a universal, plug-and-play interface between AI agents and the external world.

How MCP Enables Agentic AI Agents to Act

1. Unified, Standardized Tool Integration

- Traditionally, connecting AI agents to tools requires building custom APIs and glue code for each integration, which is costly, slow, and fragile.

- MCP replaces this patchwork with a single protocol that AI agents use to communicate with any MCP-compatible tool, regardless of whether it’s local or cloud-based.

- This enables agents to perform useful, multi-step workflows autonomously, such as retrieving data, managing files, executing code, or interacting with web services—transforming AI from passive responders into active doers.

2. Client-Server Architecture

- MCP uses a clean client-server model:

- MCP Clients live inside AI agents and handle all communication.

- MCP Servers act as intelligent adapters for tools, translating AI requests into actionable commands and returning structured results.

- This architecture abstracts away the complexity of individual tools, allowing AI to discover tool capabilities, invoke commands, and handle errors gracefully.

3. Real-Time, Flexible, and Scalable Communication

- MCP works over both local connections (e.g., AI to a desktop app) and internet-based communication (e.g., AI to SaaS APIs).

- It exchanges JSON-RPC 2.0 messages, a structured, human-readable JSON format that keeps requests and responses consistent and makes the protocol easy to extend.

- AI agents can dynamically chain multiple MCP servers together in complex task workflows without prior knowledge or manual coding.

4. Unlocking Agent Autonomy and Practical Utility

- By removing the need for manual integration work, MCP lets AI agents autonomously explore and use new tools on the fly.

- This means agents can not only understand but actually execute meaningful tasks—saving summaries to files, querying databases, coding across multiple files, or controlling cloud services.

- MCP thereby unlocks the practical potential of agentic AI as a technology that actually “does” things in the world, rather than just talking about them.

5. Growing Ecosystem and Marketplaces

- MCP is rapidly evolving from a technical protocol into a thriving ecosystem with marketplaces offering pre-built MCP servers for popular tools like GitHub, Notion, and Figma.

- This marketplace model accelerates adoption and innovation, making it easier than ever to build and deploy agentic AI solutions that connect to real-world apps and data.
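The client-server exchange described under point 2 above can be sketched in a few lines of Python. This is a simplified, in-process illustration, not the official MCP SDK: the `save_summary` tool, the `SAVED_FILES` store, and the helper names are hypothetical, and only the JSON-RPC 2.0 envelope and the `tools/list` and `tools/call` method names are taken from the protocol. A real deployment would send the same messages over a local or network transport.

```python
import json

# Hypothetical tool metadata that a toy server advertises to clients.
TOOLS = {
    "save_summary": {
        "description": "Store a text summary under a file name (in memory only).",
        "inputSchema": {
            "type": "object",
            "properties": {
                "filename": {"type": "string"},
                "text": {"type": "string"},
            },
            "required": ["filename", "text"],
        },
    }
}

SAVED_FILES = {}  # stands in for a real file system


def save_summary(filename, text):
    """Hypothetical tool implementation."""
    SAVED_FILES[filename] = text
    return f"saved {len(text)} characters to {filename}"


HANDLERS = {"save_summary": save_summary}


def _error(request_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw):
    """Toy server: dispatch one JSON-RPC request string, return a response string."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result = {"tools": [{"name": name, **meta} for name, meta in TOOLS.items()]}
    elif method == "tools/call":
        name = params["name"]
        if name not in HANDLERS:
            return _error(req["id"], -32602, f"Unknown tool: {name}")
        text = HANDLERS[name](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(req["id"], -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


def client_call(method, params=None, request_id=1):
    """Toy client: build a request, pass it to the server in-process, parse the reply."""
    request = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        request["params"] = params
    return json.loads(handle_request(json.dumps(request)))


# The agent first discovers the available tools, then invokes one of them.
listing = client_call("tools/list")
tool_names = [tool["name"] for tool in listing["result"]["tools"]]
call = client_call(
    "tools/call",
    {"name": "save_summary", "arguments": {"filename": "notes.txt", "text": "MCP demo"}},
    request_id=2,
)
print(tool_names)
print(call["result"]["content"][0]["text"])
```

The agent never needs tool-specific glue code here: it learns what is available from the `tools/list` response and drives every tool through the same `tools/call` shape, which is the property the points above attribute to MCP.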

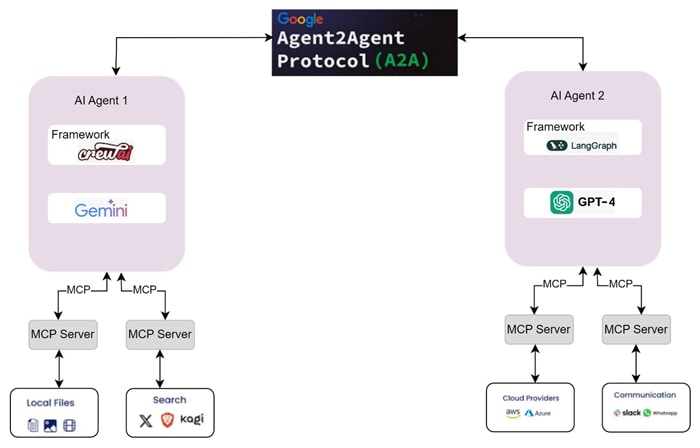

Role of A2A (Agent to Agent Protocol) in Agentic AI

The rise of agentic AI, in which AI systems autonomously plan, reason, and collaborate, demands more than just powerful individual agents. It requires a standardized, secure, and scalable communication framework that lets agents interact, delegate tasks, and coordinate efforts across heterogeneous environments. This is precisely where Google’s Agent2Agent (A2A) protocol plays a pivotal role.

Solving Fragmentation in the Agent Ecosystem

AI agents are becoming more specialized: one might be great at research, another at booking flights, and another at analyzing data. While each can work well on its own, real value often comes from getting them to work together. But without a shared way to communicate, connecting them means writing custom code for every new pairing.

The A2A protocol solves this problem by serving as a universal communication layer for AI agents, similar to how HTTP connects websites. It gives agents a standard way to talk to each other, no matter who built them or how they work, making true collaboration across systems possible.

Key Contributions of A2A to Agentic AI

- Enabling Agent Collaboration

- A2A empowers agents to work together toward shared goals. It formalizes agent-to-agent communication using structured messages and well-defined task lifecycles. Agents can negotiate roles, delegate subtasks, and synchronize progress without custom integrations.

- Example: A personal assistant agent can coordinate with a travel planner, flight booker, and hotel recommender to deliver a complete itinerary by communicating through A2A.

- Supporting Specialization Through Capability Discovery

- With Agent Cards (JSON-based "resumes" that describe an agent’s skills, endpoints, and requirements), A2A allows client agents to discover the right agent for the right task. This mirrors how modern software systems use APIs to discover and invoke services dynamically.

- Agents become plug-and-play components in a larger cognitive workflow.

- Secure and Opaque Agent Interactions

- A2A enables agents to interact securely without revealing their internal memory, tools, or proprietary logic. This approach preserves intellectual property, allows agents to be swapped as long as they offer the same capabilities, and supports secure integration of third-party agents. By keeping internal details hidden, A2A ensures both privacy and broad collaboration.

- Facilitating Multi-Turn, Multi-Agent Interactions

- By supporting structured messaging, A2A enables multi-turn conversations across agents. Through content-rich messages (including text, files, forms, etc.), agents can ask follow-up questions, clarify instructions, and return results in a negotiated format suited to the recipient's capabilities.
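The capability discovery and delegation described in the list above can be illustrated with a small sketch. It is a simplified model rather than the A2A SDK: the `AgentCard`, `Skill`, and `Task` classes only approximate the fields of a real Agent Card and task, the registry and the `example.com` URLs are hypothetical, the task states shown are an assumed subset of the lifecycle, and the remote agent's reply is simulated locally instead of being sent over the network.

```python
from dataclasses import dataclass, field


@dataclass
class Skill:
    id: str
    description: str
    tags: list


@dataclass
class AgentCard:
    """Simplified stand-in for an A2A Agent Card."""
    name: str
    url: str
    skills: list


@dataclass
class Task:
    """Simplified task with a lifecycle state and a message history."""
    id: str
    skill_id: str
    state: str = "submitted"
    messages: list = field(default_factory=list)


# Hypothetical registry of cards that a client agent has already fetched.
REGISTRY = [
    AgentCard(
        name="flight-booker",
        url="https://agents.example.com/flights",
        skills=[Skill("book_flight", "Searches and books flights", ["travel", "flights"])],
    ),
    AgentCard(
        name="hotel-recommender",
        url="https://agents.example.com/hotels",
        skills=[Skill("recommend_hotel", "Suggests hotels for a trip", ["travel", "hotels"])],
    ),
]


def discover(tag):
    """Return (card, skill) pairs whose skill advertises the given tag."""
    return [(card, skill) for card in REGISTRY for skill in card.skills if tag in skill.tags]


def delegate(card, skill, request_text, task_id):
    """Simulate delegating a subtask; a real client would send it to card.url."""
    task = Task(id=task_id, skill_id=skill.id)
    task.messages.append({"role": "user", "text": request_text})
    task.state = "working"
    # Simulated reply from the remote agent.
    task.messages.append({"role": "agent", "text": f"{card.name} handled: {request_text}"})
    task.state = "completed"
    return task


# A personal assistant agent picks an agent by capability, then delegates.
card, skill = discover("flights")[0]
task = delegate(card, skill, "Find a flight from Pune to Berlin", "task-1")
print(card.name, task.state)
```

The client selects an agent purely from what its card advertises and sees only messages and task state in return, which reflects the opaque, swappable interaction model described above.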

The diagram below shows a comparative view of A2A and MCP.

Conclusion

This evaluation of leading agentic frameworks (LangGraph, LlamaIndex Workflows, CrewAI, MetaGPT, Langroid, Microsoft Magentic-One, IBM Bee Framework, AWS Bedrock Agent, Google Agent Development Kit, and Salesforce Agentforce) provides a comprehensive comparison across core functionalities, technical capabilities, and practical considerations. Our findings reveal that no single framework universally excels in all dimensions; rather, the optimal choice depends on the specific context of industry requirements, use case complexity, deployment timelines, and organizational constraints.

For enterprises aiming for rapid implementation and seeking to leverage established cloud ecosystems, agentic AI frameworks from leading providers such as AWS Bedrock Agent offer mature platforms with strong cloud integration and broad community support, making them well-suited for industries like finance, retail, and cloud-native technology firms.

For SDLC and software engineering use cases, MetaGPT excels with its multi-persona agent architecture, enabling agents to simulate specialized roles like product manager, architect, or QA engineer across the software development lifecycle. This makes it well-suited for tech-centric domains such as software services, product engineering, and DevOps, where structured collaboration and agile-aligned workflows are essential.

In domains demanding high customization and extensibility, such as research-driven or specialized manufacturing sectors, open and flexible frameworks like LangGraph and LlamaIndex Workflows (the latter for event-driven tasks) are a strong fit. Their superior agent modeling capabilities, along with strong support for LLM compatibility, enable sophisticated workflows tailored to complex scenarios involving recursive task decomposition and effective human-agent collaboration.

Google Agent Development Kit (ADK) is a code-first, modular framework designed for building complex, production-ready multi-agent systems. It offers orchestration primitives (e.g., SequentialAgent, LoopAgent) and strong support for observability and monitoring. With built-in connectors for tools like web search and code execution, model-agnostic LLM support, and evaluation capabilities, ADK is well-suited for enterprise use cases in regulated industries such as healthcare and finance. While still maturing, its enterprise-grade infrastructure and cloud-native design make it a robust option for scalable, secure deployments.

For use cases centered around sales automation and customer engagement, Salesforce Agentforce provides an out-of-the-box agent catalog and seamless integration with CRM platforms, enabling businesses to accelerate adoption with minimal overhead.

The evaluation also highlights that frameworks vary significantly in terms of technical maturity and ecosystem support. Early-stage frameworks such as CrewAI, IBM Bee Framework, and Langroid exhibit promising innovation but may require longer ramp-up times and more in-house expertise to achieve production readiness.

Ultimately, organizations should weigh their priorities across time-to-market, complexity tolerance, infrastructure capacity, and licensing constraints when selecting an agentic framework. We recommend conducting pilot projects to validate framework suitability in context-specific environments before full-scale adoption.
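One way to make this weighing explicit is a simple weighted decision matrix. The sketch below is illustrative only: the criterion weights, the 1 to 5 scores, and the names `framework_a` and `framework_b` are hypothetical placeholders, not results from this evaluation. An organization would substitute its own weights and the scores observed in its pilot projects.

```python
# Hypothetical priority weights; they should sum to 1.0.
WEIGHTS = {
    "time_to_market": 0.4,
    "complexity_tolerance": 0.2,
    "infrastructure_capacity": 0.2,
    "licensing_fit": 0.2,
}

# Placeholder scores on a 1-5 scale, NOT measurements from this paper.
SCORES = {
    "framework_a": {
        "time_to_market": 5,
        "complexity_tolerance": 2,
        "infrastructure_capacity": 4,
        "licensing_fit": 3,
    },
    "framework_b": {
        "time_to_market": 2,
        "complexity_tolerance": 5,
        "infrastructure_capacity": 3,
        "licensing_fit": 5,
    },
}


def weighted_score(scores, weights):
    """Sum of each criterion score multiplied by its weight."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


# Rank candidate frameworks from best to worst under the chosen weights.
ranking = sorted(SCORES, key=lambda name: weighted_score(SCORES[name], WEIGHTS), reverse=True)
for name in ranking:
    print(name, round(weighted_score(SCORES[name], WEIGHTS), 2))
```

Changing the weights (for example, lowering `time_to_market` for a research team) can reverse the ranking, which is why the choice is context-dependent rather than universal.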

This white paper aims to guide decision-makers in aligning agentic framework capabilities with strategic objectives, facilitating informed investments in AI-driven automation tailored to industry and operational needs.

