Artificial Intelligence

Building Software with Agents – A Solution View

Software development is an art where engineers create components using logic and imagination, now enhanced by AI. AI won't replace human creativity but can assist in solving complex problems quickly. Human feedback is crucial in an AI-driven development era due to the complexity of most enterprise software systems. While AI can automate many daily tasks, human intervention remains necessary for managing intricate architecture.

This paper explains how AI-based software development enables engineers to ship software faster while maintaining quality, and how it redefines software itself as an integrated system of components that leverage AI to fulfill their functionality. It also defines the key architectural components required to build an Agentic AI-based software system.

Insights

- How to build Agentic AI-based software solutions.

- Tools and technologies that can be leveraged to build Agentic AI systems.

- Lessons learned and practical implications.

- How to monitor Agentic AI systems.

- Key findings from the agentic software development model we built as part of the AI Center of Excellence (COE).

- A reference architecture for Agentic AI solutions.

Introduction

The emergence of AI requires organizations to make software development AI-enabled. This does not simply mean using AI tools such as GitHub Copilot to build software; it means making AI a core part of the software architecture. Every software solution is a workflow with different actors. For example, a simple application might consist of a UI, an API, and a database, while a complex application has more components, including third-party services or even human actors.

For example, an ecommerce platform might include key components such as product search, shopping cart, checkout, and order management. Traditionally, these systems have been programmed to perform specific functions. However, in the era of artificial intelligence, this approach may change. Systems and services could be designed as intelligent agents that collaborate to provide users with a more streamlined experience. In complex systems like ecommerce applications, workflows can be created where different components perform specific tasks and interact to produce an outcome. AI can be utilized to automate a significant portion of these workflow processes.

AI will not replace everything; rather, it will handle certain aspects of the workflow and enhance the traditional logic written by human programmers. For instance, AI may suggest search results, generate information based on context, review documents, or route workflows to activities requiring human intervention, such as approvals. Additionally, AI can augment a database layer by generating queries dynamically based on context, without the need for hardcoding.

Key aspects of Agentic AI and Reference Architecture

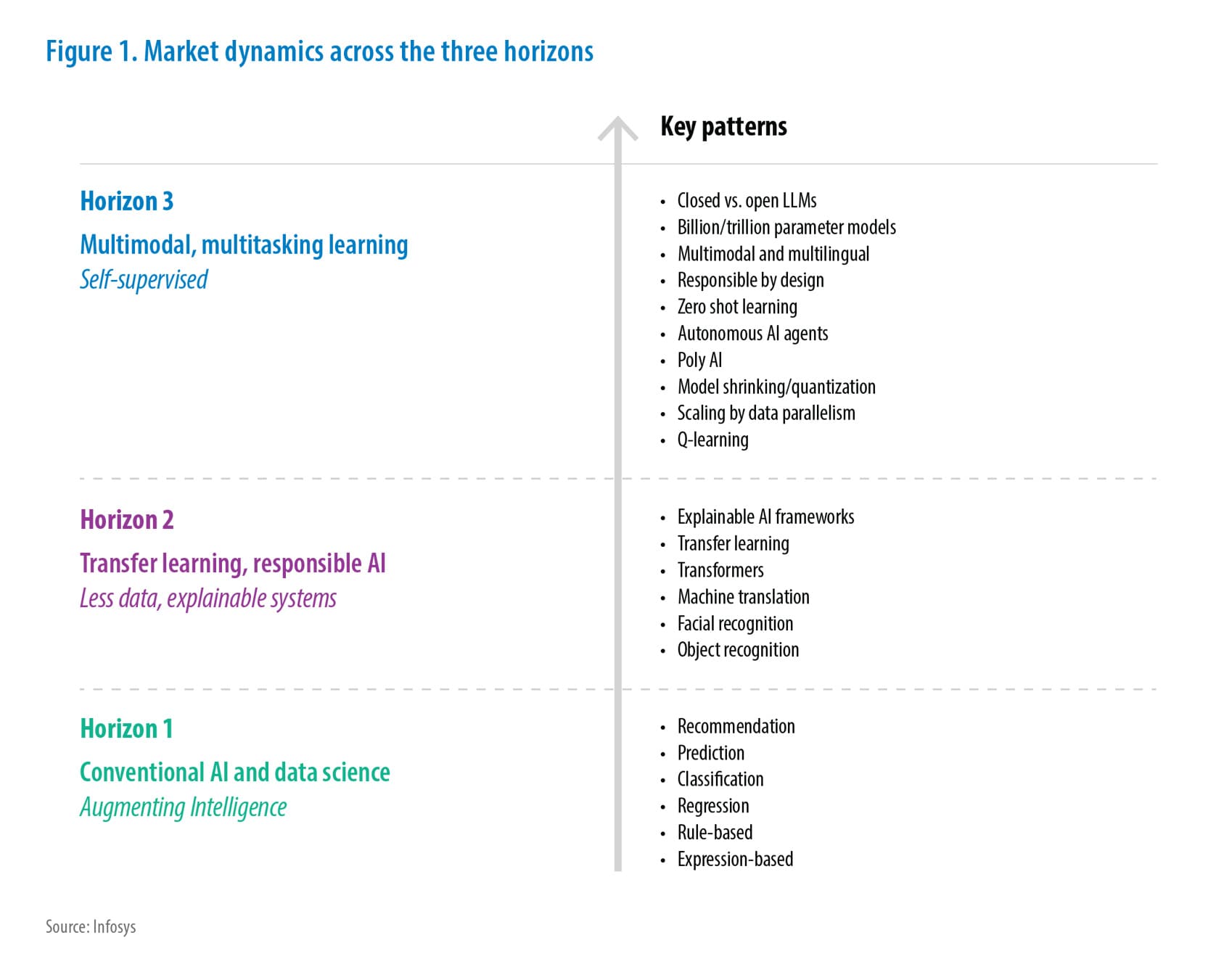

When developing software systems, AI can be leveraged in many different ways. The most popular choice is an AI-assisted IDE or coding assistant such as GitHub Copilot, Cursor, or Windsurf. These tools are excellent at helping developers work faster. But AI-enabled software is not only about using AI tools for software development; it is also about making AI an essential part of the software itself. This is where Agentic AI becomes an essential part of software development. An Agentic AI-enabled software system is depicted in Figure 1.

Figure 1. Agentic AI enabled Software System

The diagram shows existing applications consuming AI capabilities through AI APIs, which keep AI features loosely coupled behind well-defined interfaces. The AI API abstracts the Agentic AI workflow, which combines traditional and AI components to execute system functions. Each Agentic AI abstraction hides its underlying technology components, whether established tools or emerging toolchains. This isolation matters because Agentic AI standards and protocols are still evolving and gaining attention from the software community.

The workflow orchestrator is a key component. It is the brain that orchestrates the execution of the agent-enabled components and coordinates between them. Some agentic components can execute in parallel and others in sequence, and it is the orchestrator's job to coordinate these tasks. Some workflows are long-running and involve human agents as well. The orchestrator must handle these by pausing the workflow and saving its current state, handing over to human agents to collect feedback, and then resuming the workflow by passing the human feedback to the subsequent agents. This can also involve persistent storage such as databases, file systems, or caches.

When designing AI-augmented software, it is crucial to implement proper checks and conditions due to AI's non-deterministic nature. Carefully selecting use cases and incorporating a human feedback loop for critical decisions can help mitigate risks such as hallucinations.

There are other crucial design aspects to address as well, particularly those that could lead to legal issues. The importance of explainable AI cannot be overstated, as it provides clarity on the decisions made by AI systems, which is essential for agentic AI systems. Although explainable AI is still an emerging field, incorporating some form of decision transparency is necessary. There are two main components to this explainability:

- The first involves proper monitoring of Agentic AI, which entails recording the queries and context provided to an AI model for a specific use case, together with the model's response.

- The second component is the ability to elucidate why an AI system made a particular decision.

The first part has matured in recent months with a lot of new tools and frameworks emerging. The second part is not yet fully matured, but AI model providers are building sophisticated systems to tackle this.
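The first component, recording what was sent to a model and what came back, can be approximated with a small wrapper around each model call. The sketch below assumes a hypothetical `fake_model` function and an in-memory `TRACES` list in place of a real LLM and a real trace store.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class ModelTrace:
    """One model interaction: what was sent, what came back, and how long it took."""
    use_case: str
    prompt: str
    context: list[str]
    response: str = ""
    latency_s: float = 0.0
    timestamp: float = field(default_factory=time.time)


TRACES: list[ModelTrace] = []  # hypothetical stand-in for a real trace store


def traced_call(use_case, prompt, context, model_fn):
    """Call a model function and record the full interaction, including failed calls."""
    trace = ModelTrace(use_case=use_case, prompt=prompt, context=list(context))
    start = time.perf_counter()
    try:
        trace.response = model_fn(prompt, context)
    finally:
        trace.latency_s = time.perf_counter() - start
        TRACES.append(trace)
    return trace.response


def fake_model(prompt, context):  # hypothetical stand-in for an LLM call
    return f"Answer to '{prompt}' using {len(context)} context items"


answer = traced_call("order-status", "Where is order 42?", ["order 42: shipped"], fake_model)
print(json.dumps(asdict(TRACES[-1]), indent=2))
```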

An essential aspect of an Agentic AI solution is providing appropriate context for every prompt sent to AI models. Traditionally, Retrieval-Augmented Generation (RAG) systems have been used to deliver essential context because of the limited context window size of Large Language Models (LLMs). Contemporary models, however, offer significantly larger context windows. While RAG remains relevant, applications can exploit these expanded windows to supply more comprehensive context. Nonetheless, in complex software environments, handcrafting the required context for each use case from various dependent systems is challenging. Each system must write its own code to obtain this context, and because that context varies in nature, reusability suffers.

Moreover, modern LLMs support capabilities such as tool calling, in which the model's response requests the invocation of additional functions. Executing these tool calls is also the developer's responsibility, which further complicates matters. This complexity underscores the importance of standards such as the Model Context Protocol (MCP). MCP addresses context provision and tool calling by establishing a standard that different systems can implement. Consequently, many software vendors are now building MCP servers around their key data sources, enabling AI systems to leverage this information. A standardized method of exposing resources and APIs as context, along with tools, to any AI model is therefore crucial, and MCP is emerging as the de facto standard for this purpose.
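To show what standardizing tool calling looks like on the wire, the sketch below answers MCP-style `tools/list` and `tools/call` requests, which MCP carries as JSON-RPC 2.0 messages. It is a deliberately simplified, hypothetical server: it omits the initialization handshake, transports, and capability negotiation, and the `get_order_status` tool is invented for illustration. A real server would normally be built with an official MCP SDK.

```python
import json

ORDERS = {"42": "shipped"}  # hypothetical data provider behind the server

# Hypothetical tool registry: name -> (description, JSON Schema of the arguments, handler).
TOOLS = {
    "get_order_status": (
        "Look up the status of an order by its id",
        {"type": "object",
         "properties": {"order_id": {"type": "string"}},
         "required": ["order_id"]},
        lambda args: ORDERS.get(args["order_id"], "unknown"),
    ),
}


def _reply(req_id, *, result=None, error=None) -> str:
    msg = {"jsonrpc": "2.0", "id": req_id}
    msg.update({"error": error} if error else {"result": result})
    return json.dumps(msg)


def handle(request_json: str) -> str:
    """Answer MCP-style 'tools/list' and 'tools/call' JSON-RPC 2.0 requests.

    Simplified sketch: no initialization handshake, transport, or capability negotiation.
    """
    req = json.loads(request_json)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        tools = [{"name": n, "description": d, "inputSchema": s} for n, (d, s, _) in TOOLS.items()]
        return _reply(req_id, result={"tools": tools})
    if method == "tools/call":
        name = params.get("name")
        if name not in TOOLS:
            return _reply(req_id, error={"code": -32602, "message": f"Unknown tool: {name}"})
        try:
            text = TOOLS[name][2](params.get("arguments", {}))
        except Exception as exc:  # tool failures are reported as results, not protocol errors
            return _reply(req_id, result={"content": [{"type": "text", "text": str(exc)}], "isError": True})
        return _reply(req_id, result={"content": [{"type": "text", "text": text}], "isError": False})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "get_order_status", "arguments": {"order_id": "42"}}}
print(handle(json.dumps(call)))
```

Because every MCP-capable client discovers tools through the same `tools/list` call and invokes them through the same `tools/call` shape, the data provider writes this integration once instead of once per AI application.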

As previously explained, due to the non-deterministic nature of Agentic AI-based systems, establishing an evaluation framework is crucial. These frameworks facilitate the assessment of AI performance and accuracy, enabling us to provide feedback so that AI can be refined to produce more deterministic outcomes for specific use cases. This also raises the question of monitoring—can traditional monitoring solutions suffice for evaluating Agentic AI systems? The answer is partially affirmative; however, they are not entirely adequate. There are several additional aspects that need monitoring in Agentic AI solutions which are not present in traditional software ecosystems, such as model interactions, token monitoring, and model performance monitoring. Therefore, there is a need for specialized monitoring solutions tailored to Agentic AI.

An Agentic AI system can be designed around a single agent that does all the work, as in a simple chatbot, or as a multi-agent system in which multiple tasks are executed in a distributed way, either sequentially or in parallel. The latter can quickly become complex to manage, which is why an orchestrator is essential in Agentic AI solutions.

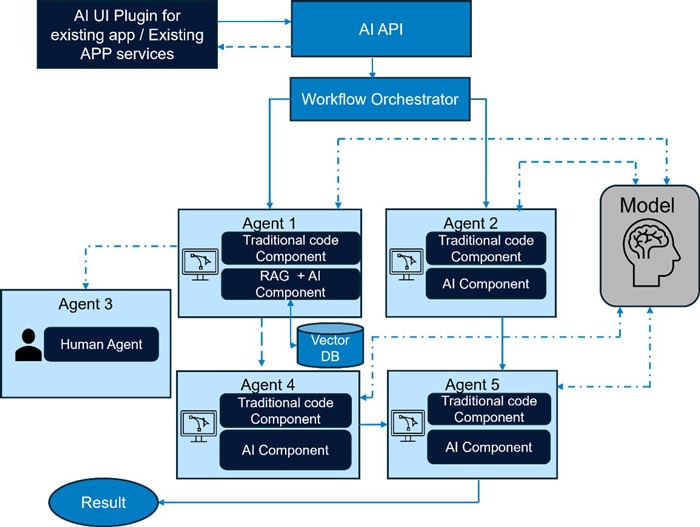

Considering all these aspects, we can define a reference architecture for an Agentic AI-based system. This system can be integrated with, or used to augment, traditional software components to build a truly agentic, AI-enabled software solution. The reference architecture is shown in Figure 2.

Figure 2. Agentic AI reference Architecture

The key components of the reference architecture are explained below:

User Interaction or Triggering Point

This is the interface between the AI workflow and a user or another system. It can be a web application whose functional use case invokes an AI workflow, or it can be an event raised inside traditional software that triggers the workflow.

Orchestration Engine

The orchestration engine is the brain of the system; it coordinates and integrates the other components essential to the solution. Orchestration engines usually have a well-built workflow system that can track and manage different tasks. Typically, the orchestration engine carries out the planning, execution, and refinement tasks performed by AI-enabled agents. To achieve its objective, it can leverage other core components such as RAG, the security system, context providers, memory providers, and integrations with other systems. Another major part is proper integration with a monitoring system.

Human in the Loop – A key characteristic of any orchestration framework is the ability to integrate a human in the loop. This lets human-facing systems plug into the Agentic AI workflow at different phases to provide review and feedback on AI-generated data. The flexibility and ease of integrating this capability should be a key aspect of any agentic framework. Agentic systems are often long-running, so the ability to persist responses and context and to continue execution after a long pause, such as hours or days, should be considered a major criterion when evaluating any framework.
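Pausing for human review and resuming hours or days later mainly requires persisting the workflow state at the pause point. The sketch below assumes a hypothetical three-step workflow and uses SQLite as the checkpoint store; frameworks such as LangGraph provide their own checkpointing, so this only illustrates the idea.

```python
import json
import sqlite3

# Checkpoint store. ":memory:" keeps the demo self-contained; a file path would let
# paused runs survive process restarts.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE IF NOT EXISTS checkpoints (run_id TEXT PRIMARY KEY, step TEXT, state TEXT)")

STEPS = ["draft", "human_review", "publish"]  # hypothetical workflow


def save(run_id: str, step: str, state: dict) -> None:
    db.execute("INSERT OR REPLACE INTO checkpoints VALUES (?, ?, ?)", (run_id, step, json.dumps(state)))
    db.commit()


def load(run_id: str) -> tuple[str, dict]:
    step, state = db.execute(
        "SELECT step, state FROM checkpoints WHERE run_id = ?", (run_id,)).fetchone()
    return step, json.loads(state)


def run(run_id: str, state: dict, start_step: str = "draft") -> str:
    """Execute steps from start_step; persist and pause when human feedback is missing."""
    for step in STEPS[STEPS.index(start_step):]:
        if step == "draft":
            state["draft"] = f"AI draft for {state['topic']}"  # stand-in for an LLM call
        elif step == "human_review" and "feedback" not in state:
            save(run_id, step, state)
            return "paused"
        elif step == "publish":
            state["final"] = f"{state['draft']} (revised: {state['feedback']})"
    save(run_id, "done", state)
    return "done"


def resume_with_feedback(run_id: str, feedback: str) -> str:
    """Called later (hours or days), e.g. from a UI, once a human has reviewed the draft."""
    step, state = load(run_id)
    if step == "done":
        return "done"
    state["feedback"] = feedback
    return run(run_id, state, start_step=step)


print(run("run-1", {"topic": "release notes"}))        # paused
print(resume_with_feedback("run-1", "add a summary"))  # done
print(load("run-1")[1]["final"])
```

The UI that collects feedback never needs to hold a connection open to the workflow; it only needs the `run_id` to call `resume_with_feedback`.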

Reasoning System

This is a type of context provider where specific rules, best practices, and RAG components reside. It guides or constrains the AI to make better decisions for a specific use-case query.

Context Provider

This provides broader context, such as access to file systems, APIs, and databases, so that the AI can understand the environment in which a query occurs. It is best implemented with MCP, which supports the essential capabilities for providing context. Apart from providing context, it also allows the system to call the APIs of other systems with the results of an AI response, enabling more automated integration with existing APIs.

Memory

Memory is an essential part of Agentic AI. There are two types of memory: short-term memory and long-term memory. Short-term memory provides immediate context for an AI interaction, especially when building a chatty integration with LLMs. Long-term memory can be leveraged for information that must be searched and used across longer workflows or across use cases, such as domain-specific information. Key characteristics of short-term memory are:

- Ephemeral: Exists only during the current task or session.

- Size-limited: Constrained by the context window of the underlying model (e.g., 8k, 32k tokens).

- Fast access: Quickly accessible and updated in real-time.

- Content: Stores immediate information like the current conversation history, instructions, intermediate reasoning steps, recent tool outputs, etc.

- Volatile: Lost when the session ends unless explicitly written to long-term memory.
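A minimal sketch of short-term memory is a session-scoped buffer that evicts the oldest messages once the conversation exceeds a token budget. Word count is used as a crude proxy for tokens here, which is an assumption; a real implementation would count tokens with the target model's tokenizer.

```python
from collections import deque


class ShortTermMemory:
    """Session-scoped conversation buffer trimmed to fit a token budget."""

    def __init__(self, max_tokens: int):
        self.max_tokens = max_tokens
        self.messages: deque = deque()

    @staticmethod
    def _tokens(text: str) -> int:
        return len(text.split())  # crude word count as a token proxy (assumption)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})
        # Evict the oldest messages until the buffer fits the budget again.
        while sum(self._tokens(m["content"]) for m in self.messages) > self.max_tokens:
            self.messages.popleft()

    def as_prompt_messages(self) -> list[dict]:
        return list(self.messages)


memory = ShortTermMemory(max_tokens=12)
memory.add("user", "Generate a REST endpoint for orders")
memory.add("assistant", "Here is a FastAPI endpoint")
memory.add("user", "Add pagination")  # pushes the total over 12, so the oldest message is evicted
print([m["content"] for m in memory.as_prompt_messages()])
```

Anything worth keeping beyond the session has to be written explicitly to long-term memory before the buffer is discarded.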

Typical characteristics of long-term memory are:

- Persistent: Retained across sessions; survives agent restarts.

- Scalable: Can grow indefinitely (depending on storage).

- Slower access: Retrieved via search, retrieval-augmented generation (RAG), or embeddings.

- Content: Stores past interactions, facts, documents, user preferences, past plans, or completed tasks.

- Learned knowledge: May include experience from previous task execution.
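Long-term memory can be sketched as a persistent store plus similarity search. To keep the example self-contained, a bag-of-words vector stands in for a real embedding model and a full table scan stands in for a vector index; both are simplifying assumptions. Pointing `LongTermMemory` at a file path instead of `:memory:` makes the memories survive restarts.

```python
import math
import sqlite3
from collections import Counter


def embed(text: str) -> Counter:
    # Bag-of-words stand-in for a real embedding model (assumption for illustration).
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class LongTermMemory:
    """Persistent memory: survives restarts when backed by a file instead of ':memory:'."""

    def __init__(self, path: str = ":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute("CREATE TABLE IF NOT EXISTS memories (id INTEGER PRIMARY KEY, kind TEXT, text TEXT)")

    def remember(self, kind: str, text: str) -> None:
        self.db.execute("INSERT INTO memories (kind, text) VALUES (?, ?)", (kind, text))
        self.db.commit()

    def recall(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored memories most similar to the query (full scan, no index)."""
        q = embed(query)
        rows = self.db.execute("SELECT text FROM memories").fetchall()
        ranked = sorted(rows, key=lambda r: cosine(q, embed(r[0])), reverse=True)
        return [text for (text,) in ranked[:k]]


ltm = LongTermMemory()
ltm.remember("preference", "user prefers pytest for python tests")
ltm.remember("fact", "orders service exposes a REST api")
ltm.remember("completed_task", "generated login page with react")
print(ltm.recall("which framework for python tests", k=1))
```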

Security and Compliance

As in any traditional software solution, proper security and access control are essential for Agentic AI solutions. Existing standards and tools such as OAuth, OpenID, and policy-based authorization solutions can be leveraged for authentication and authorization. AI-enabled conditional access is another type of access control, which can make policy-based authorization more dynamic. In addition to standard tools, AI-specific tools can be leveraged to restrict the output schema of AI models so that responses comply with requirements, avoid bias, and are more deterministic.
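Policy-based authorization can be applied to the actions an agent takes on a user's behalf, not only to the user interface. In the hypothetical sketch below, the caller's roles are assumed to come from an already validated OAuth/OpenID token, and a `risk` level (which an AI-enabled conditional access step could supply) tightens the policy for sensitive tools. Unknown tools are denied by default.

```python
from dataclasses import dataclass

# Hypothetical policies: which roles may let an agent invoke which tools, and up to what risk.
POLICIES = {
    "read_repository": {"roles": {"developer", "reviewer"}},
    "merge_pull_request": {"roles": {"maintainer"}, "max_risk": "low"},
}
RISK_ORDER = ["low", "medium", "high"]


@dataclass
class Principal:
    user_id: str
    roles: set[str]  # assumed to come from validated token claims


def is_allowed(principal: Principal, tool: str, risk: str = "low") -> bool:
    """Deny by default; allow only if a policy grants the tool to one of the caller's roles."""
    policy = POLICIES.get(tool)
    if policy is None or not (principal.roles & policy["roles"]):
        return False
    max_risk = policy.get("max_risk")
    return max_risk is None or RISK_ORDER.index(risk) <= RISK_ORDER.index(max_risk)


dev = Principal("alice", {"developer"})
maintainer = Principal("bob", {"maintainer"})
print(is_allowed(dev, "read_repository"))                    # True
print(is_allowed(dev, "merge_pull_request"))                 # False
print(is_allowed(maintainer, "merge_pull_request", "high"))  # False
```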

Monitoring

Monitoring Agentic AI differs from traditional monitoring, so specific tools are needed for Agentic AI software. These tools provide insight into what is sent to AI models, what the models return, how many tokens were consumed, what the latency and cost are, and so on. Some of them also provide evaluation capabilities, which help improve the prompting techniques used in the Agentic AI system so that it performs more accurately. Traditional monitoring solutions can be augmented with Agentic AI-specific monitoring to get proper insight into the application.
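The token, latency, and cost figures mentioned above can be derived from per-call records. The sketch assumes each call's token counts are already available (many model APIs report them) and uses hypothetical per-1K-token prices for an invented `model-a`; real prices vary by provider and model.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical per-1K-token prices; real prices depend on the provider and model.
PRICE_PER_1K = {"model-a": {"input": 0.5, "output": 1.5}}


@dataclass
class CallRecord:
    model: str
    input_tokens: int
    output_tokens: int
    latency_s: float


def cost(rec: CallRecord) -> float:
    p = PRICE_PER_1K[rec.model]
    return rec.input_tokens / 1000 * p["input"] + rec.output_tokens / 1000 * p["output"]


def summarize(records: list[CallRecord]) -> dict:
    """Aggregate the per-call metrics that traditional APM tools usually do not capture."""
    return {
        "calls": len(records),
        "total_tokens": sum(r.input_tokens + r.output_tokens for r in records),
        "avg_latency_s": mean(r.latency_s for r in records),
        "total_cost": round(sum(cost(r) for r in records), 4),
    }


records = [CallRecord("model-a", 1200, 300, 1.8), CallRecord("model-a", 800, 200, 1.2)]
print(summarize(records))
```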

Evaluation

Any agentic solution needs a proper evaluation technique so that the system can be improved to provide better responses for a specific context; this helps avoid hallucinations and builds a more robust system. There are two types of evaluation: human and automated. Human evaluation can be leveraged for more critical systems, especially in the initial stages, while automated tools can provide evaluation integrated within the agentic software solution.
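Combining the two types of evaluation can be as simple as scoring every test case automatically and routing low-scoring cases to a human reviewer. The keyword check, the `candidate_system` stub, and the threshold below are hypothetical; real suites typically use richer metrics or a model-based judge.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    must_contain: list[str]  # simple automated check (assumption for illustration)


def score(output: str, case: EvalCase) -> float:
    hits = sum(1 for kw in case.must_contain if kw.lower() in output.lower())
    return hits / len(case.must_contain)


def evaluate(system: Callable[[str], str], cases: list[EvalCase], review_threshold: float = 1.0):
    """Score every case automatically; queue anything below the threshold for human review."""
    scores, needs_review = [], []
    for case in cases:
        s = score(system(case.prompt), case)
        scores.append(s)
        if s < review_threshold:
            needs_review.append(case.prompt)
    return sum(scores) / len(scores), needs_review


def candidate_system(prompt: str) -> str:  # hypothetical agent under test
    return "def add(a, b):\n    return a + b" if "add" in prompt else "TODO"


cases = [
    EvalCase("write a python add function", ["def add", "return"]),
    EvalCase("write a python multiply function", ["def multiply", "return"]),
]
accuracy, review_queue = evaluate(candidate_system, cases)
print(accuracy, review_queue)
```

Re-running the same suite after each prompt change gives a simple regression signal for the non-deterministic parts of the system.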

Deployment

Agentic software solutions can be deployed using a standard deployment model, because the agentic components are typically hosted in standard software processes. For example, most agentic frameworks are built with Python, so FastAPI is a good hosting process for agentic components, and the solution can be deployed in a containerized environment such as Kubernetes (K8s) or a cloud platform.

Comparison of Major Agentic AI frameworks

A few major frameworks are becoming front runners in providing the capabilities required to build an agentic AI solution. The following section briefly compares three of them and the benefits of each.

| LangGraph | Crew AI | LlamaIndex Workflow |
|---|---|---|
| LangGraph is a powerful framework designed for building stateful, multi-step AI applications using graph-based workflows. It enables developers to define AI agents as directed graphs, where each node represents a step in the reasoning process (such as calling a language model, invoking a tool, or making a decision) and edges represent possible transitions based on the outputs. LangGraph is particularly well-suited for agentic systems that require memory, tool usage, and conditional branching. It integrates seamlessly with LangChain, allowing complex agent behaviors to be composed in a modular and interpretable way, making it ideal for applications like chat agents, autonomous workflows, and evaluation pipelines. | Crew AI is a framework for orchestrating collaborative, multi-agent systems, where multiple AI agents, each with a specialized role, work together to complete complex tasks. Inspired by real-world team dynamics, Crew AI enables developers to define agents with distinct capabilities, assign them to roles, and coordinate them through structured workflows. Each agent can use tools, maintain memory, and interact with others through message passing, making the framework well-suited for scenarios that require division of labor, role-based reasoning, and parallel execution. Crew AI simplifies the development of agentic teams for use cases like research assistants, business automation, and collaborative problem-solving environments. | LlamaIndex Workflow provides a structured approach to building retrieval-augmented generation (RAG) and agentic systems by combining data indexing, query planning, and tool integration within a unified framework. It allows developers to define multi-step workflows where language models interact with structured data, tools, and external APIs. Unlike LangGraph, which emphasizes graph-based control flow, or Crew AI, which focuses on team-based agent orchestration, LlamaIndex emphasizes context-aware reasoning over data sources, making it particularly powerful for knowledge-intensive tasks such as document QA, research assistants, and analytics bots. Its built-in planner-executor architecture supports dynamic decision-making while maintaining a strong connection to underlying data representations like vector stores and indices. |

In summary, if we need to build an agentic application quickly with a high level of abstraction, Crew AI is a good framework because it hides several complexities under the hood. That same abstraction makes it less suitable for complex applications that require very fine-grained control by the development team, such as complex custom prompts or flows with complex routing rules. Both LangGraph and LlamaIndex shine in those aspects.

Because LangGraph is based on directed graphs, it can be leveraged to build very flexible multi-step logic involving components such as assistants, planners, and executors, and it has excellent support for decoupled human feedback loops. LlamaIndex Workflow, on the other hand, provides a simpler event-based workflow without a complex graph-based model and has built-in support for RAG (Retrieval-Augmented Generation), but it lacks a built-in state-handling mechanism. In a nutshell, for use cases where built-in RAG capabilities are required and there is no need for very complex multi-agent control flow, LlamaIndex is a good candidate: it is a somewhat more opinionated framework, but it shines in building data-augmented apps quickly, with many built-in data connectors. To build a highly complex multi-model, multi-agent workflow that requires complete flow control, LangGraph is the better framework. All these frameworks are improving over time, and other frameworks are entering the mainstream as well, so a proper evaluation based on the use case is advised for any professional development.

Lessons Learned from Practical Implementation

Leveraging the key capabilities specified in this paper, we have built an agentic AI solution for SDLC Automation. The major considerations while building this system are as follows:

- A decoupled user interface (UI) that can be extended or rewritten in any future technologies.

- Standardized communication mechanisms from the UI to the Agentic System.

- Configuration capabilities to modify prompts without altering the underlying agentic code.

- The ability to provide human feedback on AI responses before generating the result.

- Fine-tuning functionalities for enhancing results with AI assistance.

- Utilization of a standard framework for regular Agentic capabilities such as orchestration, evaluation, and monitoring.

- Persistent workflows that allow the pausing and restarting of use case flows at any point in time.

- Abstraction of LLM calls to prevent direct use of any LLM provider’s client library, facilitating the change of the underlying LLM with minimal code modifications.

- Deployment of applications using standardized methods such as containerized deployment.

- Leveraging mature, open-source frameworks supported by an active community.
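Two of these considerations, prompts held in configuration and LLM calls behind an abstraction, can be sketched together. The `LLMClient` protocol and the `EchoClient`/`UpperClient` adapters are hypothetical placeholders; real adapters would wrap a provider SDK or a gateway such as LiteLLM.

```python
import json
from string import Template
from typing import Protocol


class LLMClient(Protocol):
    def complete(self, prompt: str) -> str: ...


# Hypothetical adapters standing in for real provider integrations.
class EchoClient:
    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UpperClient:
    def complete(self, prompt: str) -> str:
        return prompt.upper()


PROVIDERS: dict[str, type] = {"echo": EchoClient, "upper": UpperClient}

# Prompts and the provider choice live in configuration, not in agent code.
CONFIG = json.loads("""{
  "provider": "echo",
  "prompts": {"user_story": "Write a user story for: $feature"}
}""")


def make_client(config: dict) -> LLMClient:
    return PROVIDERS[config["provider"]]()


def render(config: dict, name: str, **values: str) -> str:
    return Template(config["prompts"][name]).substitute(**values)


def run_agent_step(client: LLMClient, config: dict, feature: str) -> str:
    # Agent code depends only on the LLMClient interface and a prompt name.
    return client.complete(render(config, "user_story", feature=feature))


print(run_agent_step(make_client(CONFIG), CONFIG, "password reset"))
```

Switching providers or rewording a prompt changes only the configuration; `run_agent_step` stays the same.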

We have heavily used the LangChain ecosystem, specifically LangGraph as the orchestration platform. LangGraph allows us to build state machines and workflows using standard graph-based constructs while keeping control over prompts. It also supports human feedback within Agentic AI solutions, enabling decoupled human touchpoints. Additionally, LangGraph facilitates long-running workflows whose activities can be resumed at any time, providing flexibility in system design.

Integration with a monitoring solution is crucial. Langfuse, an open-source tool supporting LangChain and LangGraph, effectively monitors agentic AI systems and tracks user sessions for isolating traces. Besides automated tracing tools, implementing proper logging at different phases of the agentic flow helps troubleshoot issues like in traditional software systems. A distributed logging system like ELK is essential for this purpose.
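Logging that a distributed log system such as ELK can index works best when each entry is structured and carries a correlation key. A minimal sketch using Python's standard `logging` module follows; the `session_id` and `phase` fields are assumed names, chosen so that entries from one agentic flow can be filtered together.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per line so a log pipeline can index the fields."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "message": record.getMessage(),
            "session_id": getattr(record, "session_id", None),
            "phase": getattr(record, "phase", None),
        })


logger = logging.getLogger("agentic")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)


def log_phase(session_id: str, phase: str, message: str) -> None:
    # `extra` attaches custom attributes to the LogRecord.
    logger.info(message, extra={"session_id": session_id, "phase": phase})


log_phase("sess-123", "planning", "plan generated with 4 steps")
log_phase("sess-123", "code_generation", "model response parsed")
```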

Hosting was another major consideration, as we aimed to use standard frameworks for hosting. Since Python is the default choice for agentic AI frameworks, we selected FastAPI for hosting agentic flows; it enables simpler and faster development within the same Python ecosystem.

When building agentic solutions, it is beneficial to conduct multiple rounds of evaluation by adjusting prompts. Leveraging prompt templates is a recommended practice, and having formatted responses aligned to a specific schema is also important. Frameworks like LangChain provide out-of-the-box support for this through the tool calling feature, so selecting models that support tool calling is necessary. Another important aspect is implementing a proper retry mechanism to fine-tune or correct any errors in parsing the response. Models may not provide the response exactly in the expected format on the first invocation, so having a retry mechanism with a feedback message detailing the exact error will help improve subsequent responses from models.

The context window size is an important factor in model interaction. Although it varies depending on the model, technologies such as RAG with summarization techniques can be utilized to provide appropriate context within this window. Additionally, implementing MCP servers near key data sources can offer context, increase reusability, and minimize overhead and troubleshooting over time.
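Fitting context into the window usually means prioritizing. The sketch below packs whole context sources in priority order until a token budget is reached; the four-characters-per-token estimate is a rough heuristic, and skipping an oversized source is a simplification where a real system might summarize or chunk it instead.

```python
from dataclasses import dataclass


@dataclass
class ContextSource:
    name: str
    priority: int  # lower number = more important
    text: str


def estimate_tokens(text: str) -> int:
    # Rough heuristic (about 4 characters per token for English text);
    # a real system would use the model's own tokenizer.
    return max(1, len(text) // 4)


def build_context(sources: list[ContextSource], budget_tokens: int) -> str:
    """Add whole sources in priority order, skipping any that would exceed the budget."""
    parts, used = [], 0
    for src in sorted(sources, key=lambda s: s.priority):
        cost = estimate_tokens(src.text)
        if used + cost > budget_tokens:
            continue  # a real system might summarize or chunk this source instead
        parts.append(f"## {src.name}\n{src.text}")
        used += cost
    return "\n\n".join(parts)


sources = [
    ContextSource("Coding standards", 2, "Use type hints. " * 20),
    ContextSource("Ticket", 1, "Add password reset to the login page."),
    ContextSource("Full repo history", 3, "commit ... " * 500),
]
ctx = build_context(sources, budget_tokens=200)
print(ctx)
```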

One of the major design decisions we made was to use an asynchronous communication pattern. Unless the application is chatty, an asynchronous communication mechanism based on queues or a publish-subscribe model is best suited for agentic AI solutions. Several factors drive this:

- Workflow systems are typically asynchronous.

- AI model responses can be slow, have errors, formatting issues, and need retries. An asynchronous design pattern is ideal in these cases.
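The queue-based pattern can be sketched in-process with `asyncio.Queue`; in production the queues would be a message broker or a publish-subscribe service. The `model_call` function is a hypothetical stand-in that fails on its first attempt for each task so that the retry path is exercised.

```python
import asyncio

attempts: dict[str, int] = {}


async def model_call(task: str) -> str:
    """Hypothetical slow model call that fails on its first attempt per task."""
    attempts[task] = attempts.get(task, 0) + 1
    await asyncio.sleep(0.01)  # simulate model latency
    if attempts[task] == 1:
        raise TimeoutError("model timed out")
    return f"result for {task}"


async def worker(requests: asyncio.Queue, results: asyncio.Queue, max_retries: int = 3) -> None:
    """Consume tasks from the request queue and publish results, retrying failed calls."""
    while True:
        task = await requests.get()
        for attempt in range(1, max_retries + 1):
            try:
                await results.put((task, await model_call(task)))
                break
            except Exception:  # timeouts, provider errors, unparseable output, ...
                if attempt == max_retries:
                    await results.put((task, "FAILED"))  # e.g. route to a dead-letter queue
                else:
                    await asyncio.sleep(0.01 * attempt)  # simple linear backoff
        requests.task_done()


async def main(tasks: list[str]) -> dict[str, str]:
    requests: asyncio.Queue = asyncio.Queue()
    results: asyncio.Queue = asyncio.Queue()
    workers = [asyncio.create_task(worker(requests, results)) for _ in range(2)]
    for t in tasks:
        requests.put_nowait(t)  # enqueueing returns immediately; callers never block on the model
    await requests.join()  # demo only: a real producer would get results via a callback or event
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    out = {}
    while not results.empty():
        task, value = results.get_nowait()
        out[task] = value
    return out


print(asyncio.run(main(["story-1", "story-2", "story-3"])))
```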

For providing human feedback, different frameworks support various models, but architectural solutions should consider a model that offers the flexibility to capture human feedback from a decoupled solution such as a UI application. Therefore, the ability to pause and restart the workflow at any point in time is important, and this flexibility should be a key criterion in evaluating any framework. LangGraph provides an interrupt-based model where the activity of a workflow can be paused and resumed later, offering flexibility. LlamaIndex workflow is another framework worth considering for this purpose. It is also possible to implement custom database-based solutions. Alternatively, instead of using tools like LangGraph and LlamaIndex workflow, one can build an agentic system with custom agents using standard workflow solutions such as Airflow and native AI APIs like the OpenAI client library or tools like LiteLLM. This approach requires more effort but may provide complete flexibility in all aspects.

Apart from human feedback, any agentic AI-based system should have a properly designed feedback mechanism for refining or correcting responses from AI. AI may respond with data that is not in line with expectations, especially in format. Take code generation for different frameworks: the expectation might be that all required files are returned in a specific format. One can tune the prompt to request proper formatting and the expected contents, but the AI may not always adhere to it. The solution is an automatic retry and error-correction mechanism: build an AI response verifier, and if a response is not in the expected format or lacks the expected contents, send the error details back to the AI. This can be done in different ways in different frameworks. One can wrap AI calls in an exception handler that catches parsing errors in the response and feeds them back with additional information about the error. Frameworks like LangGraph can also be used to introduce a verification node that checks the results.
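The verify-and-retry loop described above might look like the following sketch. The expected response shape (`{"files": [{"path": ..., "content": ...}]}`) and the `fake_model` stub are assumptions for illustration; the key idea is that the exact validation error is appended to the conversation before the next attempt.

```python
import json


def verify(raw: str) -> dict:
    """Parse and validate a code-generation response; raise ValueError with a precise reason."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON ({exc})") from exc
    if not isinstance(data, dict) or not isinstance(data.get("files"), list):
        raise ValueError("response must be a JSON object with a 'files' list")
    for i, f in enumerate(data["files"]):
        if not isinstance(f, dict) or not {"path", "content"}.issubset(f):
            raise ValueError(f"files[{i}] must contain 'path' and 'content'")
    return data


def generate_with_retry(call_model, prompt: str, max_attempts: int = 3) -> dict:
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_attempts):
        raw = call_model(messages)
        try:
            return verify(raw)
        except ValueError as err:
            # Feed the exact error back so the next attempt can correct it.
            messages += [
                {"role": "assistant", "content": raw},
                {"role": "user", "content": f"Your previous response was invalid: {err}. Return corrected JSON only."},
            ]
    raise RuntimeError(f"no valid response after {max_attempts} attempts")


def fake_model(messages):  # hypothetical model: malformed on the first try, valid after feedback
    if len(messages) == 1:
        return '{"files": [{"path": "app.py"}]}'
    return '{"files": [{"path": "app.py", "content": "print(1)"}]}'


result = generate_with_retry(fake_model, "Generate app.py as JSON")
print(result["files"][0]["path"])
```

Capping the attempts and raising afterwards keeps a persistently failing model from looping forever; the caller can then escalate to a human.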

A significant lesson learned is to adhere to the Python ecosystem for developing agentic workflows. Although there are frameworks such as Semantic Kernel that enable AI-driven software development in other languages, the most established and mature frameworks are developed in Python. Therefore, it is advisable to leverage Python as the foundational standard for any agentic AI-based software development.

Conclusion

This paper emphasizes the integration of traditional software engineering practices with modern AI techniques to build AI-enabled software systems. It introduces a reference architecture for agentic AI-based solutions, highlighting essential components like the Orchestrator, Memory, Monitoring and Evaluation, and the MCP. The discussion includes key frameworks and tools that support the development of intelligent systems capable of autonomous decision-making and self-improvement. Practical guidance for engineers is offered, focusing on leveraging the Python ecosystem for creating adaptable and intelligent software architectures.
