
Harnessing Generative AI (GenAI) for Modernizing Legacy ERP Applications with Reverse Engineering

Many companies use Oracle JD Edwards (JDE) World for enterprise operations. Supporting the application is challenging for a variety of reasons, such as the end of standard support, outdated technology, and limited training and documentation resources. When key skilled professionals leave the organization, the loss of their expertise creates a knowledge gap, and the organization struggles to find adequate support. Imagine a feature that could create comprehensive user manuals with a single click.

This paper explores how Generative AI can transform the creation of techno-functional documentation by automatically analyzing the code base. With this approach, an organization can generate documentation from its own code base without relying on scarce skilled technical resources.

Insights

- Using a GenAI large language model (LLM) such as Azure OpenAI, advanced prompt engineering techniques, and a specified documentation template, this system generates detailed functional mappings and descriptions for Oracle JD Edwards World programs (RPG/400 and CL).

- This involves pre-processing JDE code to parse and extract business logic, which is then used to create specific prompts for generating the target components and templates.

- This approach improves not only efficiency but also the quality of specifications, addressing key challenges in modernizing legacy ERP applications.
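The following is a minimal sketch of how a documentation template and one pre-processed code chunk might be combined into a chat prompt. The template sections, the system instruction and the `build_messages` helper are hypothetical illustrations, not the prompts used in this work. The output is the role/content message list that chat-completion APIs such as Azure OpenAI accept.

```python
from string import Template

# Hypothetical documentation template: the section list is illustrative only.
DOC_TEMPLATE = Template(
    "Program: $program\n"
    "Produce these sections:\n"
    "1. Purpose\n"
    "2. Files used\n"
    "3. Business rules\n"
    "4. Subroutine descriptions"
)

# Hypothetical system instruction that keeps the model tied to the supplied code.
SYSTEM_PROMPT = (
    "You are an expert in Oracle JD Edwards World RPG/400 and CL programs. "
    "Describe only behaviour that is visible in the supplied code and "
    "write 'unknown' instead of guessing."
)


def build_messages(program: str, code_chunk: str) -> list[dict[str, str]]:
    """Combine the template and one code chunk into chat-style messages."""
    user_prompt = (
        DOC_TEMPLATE.substitute(program=program)
        + "\n\nFill in the sections above for this source code:\n"
        + "<<<SOURCE\n" + code_chunk + "\nSOURCE>>>"
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_prompt},
    ]
```

Keeping the template separate from the code means every program is asked for the same sections. This keeps the generated output uniform from one program to the next.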

Introduction

Maintaining outdated systems presents numerous challenges. The scarcity of professionals skilled in these systems drives up support costs. Additional issues such as integration limitations, security and compliance risks, and limited scalability further complicate maintenance efforts.

A significant challenge is the shrinking pool of experts in legacy technologies. For instance, a survey revealed that 71% of IT leaders struggle to find developers proficient in legacy languages like COBOL. Older systems are also more prone to failures and downtime, with studies indicating they experience 60% more downtime compared to modern systems.

This whitepaper focuses on Oracle JD Edwards (JDE) World releases, which run on the IBM AS/400 platform and face the same support and maintenance challenges. Generative AI can play a major role in modernizing JDE World applications.

Business Benefits

- Reverse Engineering: Gen AI can automatically analyze legacy code to identify its structure, dependencies, and business logic. This aids in understanding the system's operation and determining necessary changes for modernization.

- Code Refactoring: Custom components can be restructured to be cleaner and more logical by eliminating redundancies. This reduces complexity and can improve performance without altering business functionality.

- Business Rules Extraction: Gen AI can extract business rules from custom code, making the implemented logic easier to understand. This is crucial for further modernizing the system without changing its functionality.

- Documentation Generation: Gen AI can generate techno-functional documentation by reading the code. This assists technical developers in enhancing or implementing new functionalities.

Reverse Engineering Using Azure OpenAI LLMs

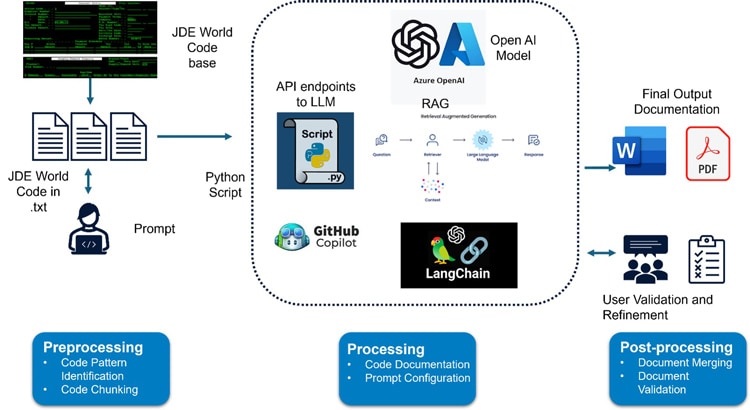

The typical process involves using reverse engineering techniques to extract business logic from JDE code, as illustrated below.

Figure 1. Reverse engineering process to extract business logic from JDE code

The first step is a process that exports JDE World source code from the program libraries into .txt files. Once these files are uploaded to the AI processing area, the GenAI LLM (Azure OpenAI in this paper) parses the AS/400 code and generates business rules. Using prompt engineering, it then creates techno-functional documentation from the input code and supporting JDE reference documents and user guides.
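The pre-processing of exported members can be sketched as follows. Each RPG/400 member is split into one chunk per subroutine (BEGSR/ENDSR pair), so that each prompt covers a coherent unit of logic. This is a simplified heuristic that rests on three assumptions:

- The members were exported as UTF-8 text with their original column layout, so there are no sequence-number or date prefixes.
- Only fixed-format RPG is handled; CL programs would need their own splitter.
- The helper names are illustrative.

```python
import re
from pathlib import Path

# A subroutine starts with "<name> BEGSR" and ends with ENDSR on the C-specs.
BEGSR = re.compile(r"(\S+)\s+BEGSR\b", re.IGNORECASE)
ENDSR = re.compile(r"\bENDSR\b", re.IGNORECASE)


def is_comment(line: str) -> bool:
    # In fixed-format RPG/400 an asterisk in position 7 marks a comment line.
    return len(line) > 6 and line[6] == "*"


def split_subroutines(source: str) -> dict[str, str]:
    """Group RPG/400 source lines into one chunk per subroutine.

    Lines outside any BEGSR/ENDSR pair (other specs and mainline
    calculations) are collected in a chunk named 'MAINLINE'.
    """
    chunks: dict[str, list[str]] = {"MAINLINE": []}
    current = "MAINLINE"
    for line in source.splitlines():
        if is_comment(line):
            chunks[current].append(line)  # comments stay with their context
            continue
        begin = BEGSR.search(line)
        if begin:
            current = begin.group(1).upper()
            chunks.setdefault(current, [])
        chunks[current].append(line)
        if ENDSR.search(line):
            current = "MAINLINE"
    return {name: "\n".join(lines) for name, lines in chunks.items()}


def load_members(folder: str) -> dict[str, dict[str, str]]:
    """Read every exported .txt member in a folder and split it into chunks."""
    return {
        path.stem: split_subroutines(path.read_text(encoding="utf-8"))
        for path in sorted(Path(folder).glob("*.txt"))
    }
```

Splitting at subroutine boundaries, rather than at a fixed number of lines, keeps each business rule inside a single prompt where possible.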

- Automated Code Parsing: Azure OpenAI LLMs can parse and analyze the existing JDE World codebase to understand its structure, dependencies, and business logic. This involves identifying key components, functions, and data flows within the system.

- Natural Language Summarization: The LLMs can generate natural language summaries of complex code segments, making it easier for developers to understand the functionality and purpose of distinct parts of the application.

- Pattern Recognition: Gen AI models can identify and extract business rules embedded within the code by recognizing patterns and logic structures. This helps document the business logic that drives the application.

- Rule Documentation: The extracted business rules can be documented in a structured format, providing a clear understanding of the system’s operational logic.
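For rule documentation, one option is to ask the model to return each rule as a JSON object, then validate the response before it enters the documentation. The sketch below assumes a hypothetical four-field schema (`rule_id`, `condition`, `action`, `source`) chosen for illustration. It uses only the Python standard library.

```python
import json
from dataclasses import dataclass

# Hypothetical rule schema that the prompt asks the model to follow.
FIELDS = ("rule_id", "condition", "action", "source")


@dataclass
class BusinessRule:
    rule_id: str
    condition: str
    action: str
    source: str  # subroutine or program the rule was extracted from


def parse_rules(llm_output: str) -> list[BusinessRule]:
    """Parse a JSON array of rules, rejecting entries with missing fields."""
    rules = []
    for item in json.loads(llm_output):
        missing = [name for name in FIELDS if name not in item]
        if missing:
            raise ValueError(f"rule is missing fields: {missing}")
        rules.append(BusinessRule(*(str(item[name]) for name in FIELDS)))
    return rules


def to_text(rules: list[BusinessRule]) -> str:
    """Render each rule as one 'IF ... THEN ...' line for the document."""
    return "\n".join(
        f"{r.rule_id}: IF {r.condition} THEN {r.action} (source: {r.source})"
        for r in rules
    )
```

Rejecting incomplete entries early exposes truncated or malformed responses before they reach the generated document.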

RAG

Retrieval-Augmented Generation (RAG) is an architecture that combines a Large Language Model (LLM) with an information retrieval system, so that generated responses draw on relevant source content. It works in three steps:

- User Query: A user submits a query or prompt.

- Data Retrieval: The query is sent to the information retrieval system (e.g., Azure AI Search), which finds the most relevant documents or data.

- Response Generation: The retrieved data is fed into the LLM together with the query, and the LLM uses it to generate a detailed response grounded in that data.

Key Components of RAG:

- Information Retrieval System: This system retrieves relevant data from a large collection of documents, databases, or other sources. In Azure, this is often implemented with Azure AI Search, which indexes and searches your content.

- Large Language Model (LLM): The LLM, such as GPT-4, processes the retrieved information and generates a coherent, contextually appropriate response. The grounding data provided by the retrieval system helps keep the response accurate and relevant.
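The retrieve-then-generate flow can be illustrated with a toy retriever. In the sketch below, simple term-overlap scoring stands in for Azure AI Search; it is not how Azure AI Search ranks results. The grounded prompt it builds is what would be sent to the LLM. Function names are illustrative.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case word tokens; a deliberately simple analyzer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return ids of the k documents sharing the most terms with the query.

    Toy stand-in for a search service such as Azure AI Search.
    """
    query_terms = Counter(tokenize(query))
    scored = []
    for doc_id, text in documents.items():
        doc_terms = Counter(tokenize(text))
        score = sum(min(count, doc_terms[term]) for term, count in query_terms.items())
        scored.append((score, doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, then by id
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_grounded_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Augment the query with retrieved passages before it is sent to the LLM."""
    context = "\n\n".join(
        f"[{doc_id}]\n{documents[doc_id]}" for doc_id in retrieve(query, documents, k)
    )
    return (
        "Answer using only the context below and cite the bracketed ids.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

In a production setup, the documents would be the JDE reference guides and code summaries indexed in the search service. Only the retrieval call would change.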

LangChain

LangChain is a framework designed to help developers build applications using large language models (LLMs) like those provided by Azure OpenAI. It simplifies the integration of these models into various applications by offering tools and components that streamline the process.

When using LangChain with Azure OpenAI, you can leverage the same API calls as you would with OpenAI's API. This compatibility makes it easier to switch between or use both platforms.

- API Configuration: You can configure the OpenAI Python package to use Azure OpenAI by setting environment variables for the API version, endpoint, and API key.

- Authentication: LangChain supports both API key and Azure Active Directory (AAD, now Microsoft Entra ID) authentication.
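The following sketch shows the configuration described above. It assumes the `langchain-openai` package, plus `azure-identity` for token-based authentication.

- `AZURE_OPENAI_ENDPOINT`, `OPENAI_API_VERSION` and `AZURE_OPENAI_API_KEY` are the environment variables read by the OpenAI and LangChain Azure clients.
- `AZURE_OPENAI_DEPLOYMENT` is a hypothetical variable name used here for the model deployment.
- The helper functions are illustrative.

```python
import os
from collections.abc import Mapping
from typing import Optional


def load_azure_settings(env: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Collect connection settings and choose the authentication method."""
    env = os.environ if env is None else env
    return {
        "azure_endpoint": env["AZURE_OPENAI_ENDPOINT"],
        "api_version": env["OPENAI_API_VERSION"],
        # Hypothetical variable holding the name of the model deployment.
        "azure_deployment": env["AZURE_OPENAI_DEPLOYMENT"],
        # Use the API key if one is set, otherwise Microsoft Entra ID (AAD).
        "auth": "api_key" if env.get("AZURE_OPENAI_API_KEY") else "entra_id",
    }


def build_chat_model(settings: Mapping[str, str]):
    """Create a LangChain chat model for the configured Azure OpenAI deployment."""
    # Imported here so that load_azure_settings works without these packages.
    from langchain_openai import AzureChatOpenAI

    kwargs = {
        "azure_endpoint": settings["azure_endpoint"],
        "api_version": settings["api_version"],
        "azure_deployment": settings["azure_deployment"],
        "temperature": 0,  # reduce variation between documentation runs
    }
    if settings["auth"] == "entra_id":
        from azure.identity import DefaultAzureCredential, get_bearer_token_provider

        kwargs["azure_ad_token_provider"] = get_bearer_token_provider(
            DefaultAzureCredential(), "https://cognitiveservices.azure.com/.default"
        )
    # In "api_key" mode, AzureChatOpenAI reads AZURE_OPENAI_API_KEY from os.environ.
    return AzureChatOpenAI(**kwargs)
```

Once created, the model object is used through LangChain's standard chat interface. The same calls apply as with a non-Azure OpenAI model.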

The AI-generated output is fragmented, because each response corresponds to one chunk of the code being processed. A Python script then consolidates these fragments, using the python-docx library to create a Microsoft Word document with headings and titled sections.
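The consolidation step might look like the sketch below. It assumes each model response was stored with its program name, its chunk position and a section heading; the `Fragment` structure is hypothetical. `Document`, `add_heading`, `add_paragraph` and `save` are standard python-docx calls.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    program: str      # e.g. the source member name
    chunk_index: int  # position of the code chunk within the program
    heading: str      # section title requested in the prompt
    text: str         # model output for that chunk


def consolidate(fragments: list[Fragment]) -> dict[str, list[tuple[str, str]]]:
    """Group fragments by program, keeping chunks in source order."""
    grouped: dict[str, list[tuple[str, str]]] = {}
    for frag in sorted(fragments, key=lambda f: (f.program, f.chunk_index)):
        grouped.setdefault(frag.program, []).append((frag.heading, frag.text))
    return grouped


def write_docx(grouped: dict[str, list[tuple[str, str]]], path: str,
               title: str = "Techno-functional documentation") -> None:
    """Write the grouped fragments to a Word document with python-docx."""
    from docx import Document  # python-docx; imported here so consolidate() runs without it

    doc = Document()
    doc.add_heading(title, level=0)  # level 0 uses the Title style
    for program, sections in grouped.items():
        doc.add_heading(program, level=1)
        for heading, text in sections:
            doc.add_heading(heading, level=2)
            for paragraph in text.split("\n\n"):
                if paragraph.strip():
                    doc.add_paragraph(paragraph.strip())
    doc.save(path)
```

Sorting by chunk index restores the original source order, even if the fragments were generated in parallel.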

Conclusion

AI-generated documentation will not be complete on its own. It needs review, validation, and refinement to reach the desired accuracy and detail. When the prompts explain the program's subroutines, coding formats, and the JDE data dictionary, the resulting AI-generated document becomes a valuable basis for further study, review, and understanding.
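One way to explain the JDE data dictionary to the model is to prepend short descriptions of the fields that a code chunk references. The sketch assumes the JDE World naming convention of a two-character file prefix followed by a data item alias, for example `ABAN8` in F0101. The three glossary entries are illustrative placeholders for descriptions exported from the actual data dictionary, and the helper names are hypothetical.

```python
import re

# Illustrative entries only; real descriptions would come from the JDE data dictionary.
GLOSSARY = {
    "AN8": "Address Number",
    "DOCO": "Order (document) number",
    "MCU": "Business Unit",
}

# Assumed convention: 2-letter file prefix + 2-4 character data item alias.
FIELD_NAME = re.compile(r"\b([A-Z]{2})([A-Z0-9]{2,4})\b")


def describe_fields(code: str) -> dict[str, str]:
    """Map each recognised field name in the code to its glossary description."""
    found = {}
    for match in FIELD_NAME.finditer(code.upper()):
        prefix, alias = match.groups()
        if alias in GLOSSARY:
            found[match.group(0)] = f"{GLOSSARY[alias]} (data item {alias}, file prefix {prefix})"
    return found


def glossary_preamble(code: str) -> str:
    """Text to place before the code chunk in the prompt; empty if no fields match."""
    lines = [f"- {name}: {text}" for name, text in sorted(describe_fields(code).items())]
    if not lines:
        return ""
    return "JDE fields referenced in this code:\n" + "\n".join(lines)
```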

