Artificial Intelligence

Artificial intelligence (AI) is advancing rapidly through breakthroughs in agentic systems, multimodal processing, and frontier cognitive architectures, innovations that are reshaping the enterprise landscape. What began as a promising experiment has matured into demonstrable business impact.

Enterprise AI architecture and technology: Designing the autonomous future

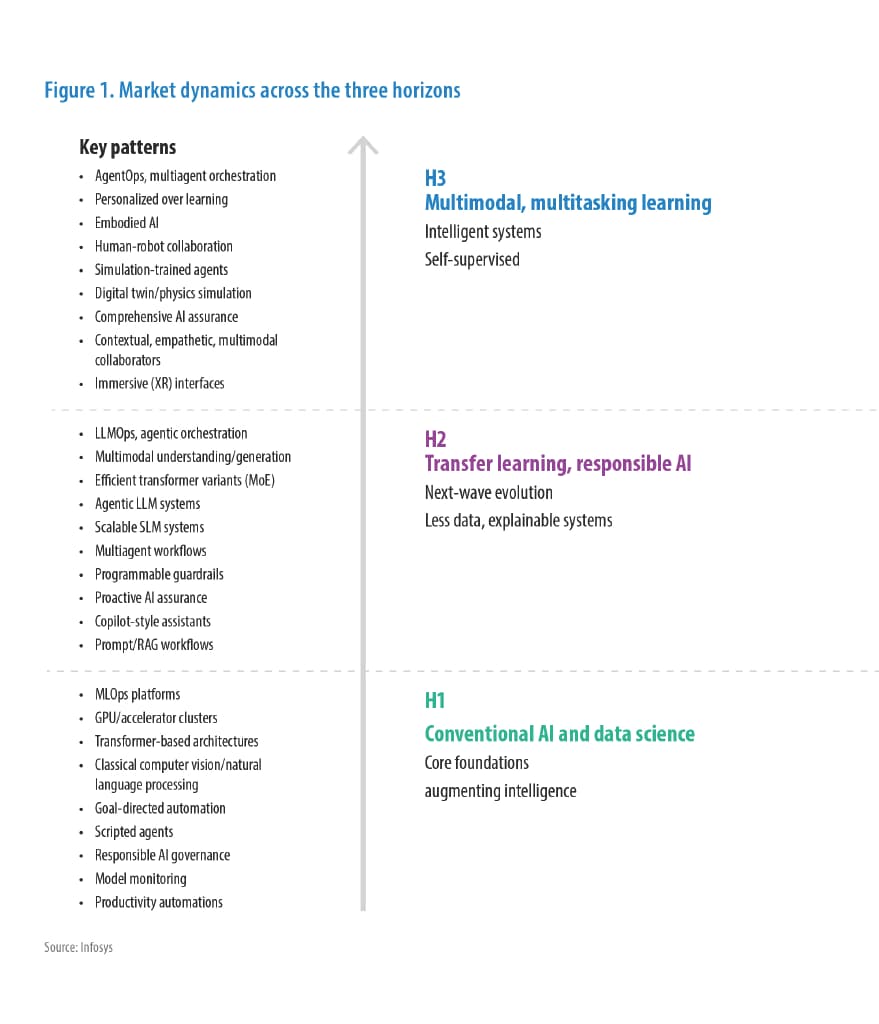

H3: Multimodal, multitasking learning (intelligent systems, self-supervised)

Key patterns

- AgentOps, multiagent orchestration
- Personalized over learning
- Embodied AI
- Human-robot collaboration
- Simulation-trained agents
- Digital-twin/physics simulation
- Comprehensive AI assurance
- Contextual, empathetic, multimodal collaborators
- Immersive (XR) interfaces

H2: Transfer learning, responsible AI (next-wave evolution, less data, explainable systems)

H1: Conventional AI and data science (core foundations, augmenting intelligence)

Key trends across AI subdomains

Trend 1: AI platforms become smarter, specialized, and multimodal

Trend 2: Autonomous, agentic AI platforms reshape enterprise operations

Trend 3: GPU-as-a-service emerges as the new infrastructure model

Trend 4: Alternative hardware drives cost-efficient AI inference

Trend 5: Smaller language models gain relevance

Trend 6: Holistic intelligence becomes AI's next frontier

Trend 7: Robotics evolves into adaptive partners

Trend 8: Shift to efficient and specialized architectures

Trend 9: Move from reactive processing to proactive, anticipatory behavior

Trend 10: AI makes a cognitive leap from execution to reasoning

To stay updated on the latest technology and industry trends, subscribe to the Infosys Knowledge Institute's publications.